A harm reduction approach to improving peer review by acknowledging its imperfections

Abstract

This candid perspective, written by scholars from diverse disciplinary backgrounds, is intended to advance conversations about the realities of peer review and its inherent limitations. Trust in a process or institution is built slowly and can be destroyed quickly. Trust in the peer review of scholarly outputs (e.g., journal articles) is being eroded by high-profile scandals, exaggerated news stories, exposés, corrections, retractions, and anecdotes about poor practices. Diminished trust in peer review has real-world consequences and threatens the uptake of critical scientific advances. The literature on “crises of trust” tells us that rebuilding diminished trust takes time and requires frank admission and discussion of problems, creative thinking that addresses rather than dismisses criticisms, and planning and enacting short- and long-term reforms that target the root causes of problems. This article takes steps in this direction by presenting eight peer review reality checks and summarizing efforts to address their weaknesses using a harm reduction approach, while recognizing that reforms take time and that some problems may never be fully rectified. Although some forms of harm reduction will require structural and procedural changes, we emphasize the vital role of training editors, reviewers, and authors. Consumers of science also need training in how the peer review process works and how to critically evaluate research findings. No amount of self-policing, transparency, or reform to peer review will eliminate all bad actors, unscrupulous publishers, perverse incentives that reward cutting corners, intentional deception, or bias. However, the scientific community can act to minimize the harms from these activities while simultaneously (re)building the peer review process. A peer review system is needed, even if it is imperfect.

Peer review and the trust crisis

Trust is a complex psychological and social process (Lewis and Weigert 1985; Evans and Krueger 2009). Though it is difficult to define (Rousseau et al. 1998), one of the more common dictionary definitions of trust is a firm belief in the reliability, truth, ability, or strength of someone or something (see McKnight and Chervany (2001) for a typology of definitions). Trust has tremendous social benefits: high-trust societies tend to be more tolerant, have higher levels of social cohesion, and report higher subjective well-being (Welch et al. 2005; Hudson 2006). Trust in other people (interpersonal trust) and in institutions (institutional trust, including trust in experts and authorities) are both important for public discourse and democracy (Warren 2018). However, trust is fragile and asymmetrical: it is built slowly but lost quickly (Lewicki and Brinsfield 2017; Cvitanovic et al. 2021). Moreover, trust lost in a “trust crisis” is rarely restored by time alone (Millstone and Van Zwanenberg 2000). Rebuilding trust takes intentional and difficult work, usually involving listening to critics, admitting problems, and a willingness to enact real changes that demonstrate that the problems have been reduced or eliminated (Alexandre et al. 2013; Lewicki and Brinsfield 2017; Altenmüller et al. 2021).

Over the last few decades, trust and mistrust in science have received much attention (Wilkie 1996; Hendriks et al. 2016; Funk et al. 2019). We use the word “science” in the broadest sense to capture scholarly activities across all domains, not just the natural, physical, health, or social sciences. For a wide range of issues, such as climate change (Hamilton et al. 2015; Lacey et al. 2018), vaccines (Black and Rappuoli 2010; Hamilton et al. 2015), or public health measures during pandemics (Kreps and Kriner 2020; Rutjens et al. 2021), trust in science is necessary to persuade the public to support initiatives intended to benefit people and the planet. There is evidence that trust in science is waning, fueled by extreme anti-science views that are shared and amplified by some politicians and celebrities (Tollefson 2020) and exacerbated by social (Huber et al. 2019) and conventional (Ophir and Jamieson 2021) media. The effects of media misinformation are so widespread that new terms, such as “alternative facts,” “truthiness,” and “post-truth,” have been coined to describe them (Sismondo 2017; Vernon 2017; Wight 2018).

One aspect of science that has been implicated in the erosion of public trust is the peer review process used to assess the quality of articles submitted to refereed journals (Ahmed 2021; Kharasch et al. 2021). In a 1999 editorial, the editor-in-chief of BMJ famously described peer review as “slow, expensive, highly subjective, prone to bias, easily abused, and poor at detecting gross defects.” (Slowness is an especially acute problem for early career researchers, who may depend on timely publication for grant applications or to meet the requirements of a graduate degree.) Recent high-profile cases involving fraud and associated retractions (Clark et al. 2016; Byrne 2019; Bucci et al. 2020; Ledford and Van Noorden 2020; Viglione 2020; Pennisi 2021), the presence of paper mills (Else and Van Noorden 2021; Sabel et al. 2023; United2Act 2024), spoof articles in predatory journals (Bohannon 2013; Grudniewicz et al. 2019), editorial or procedural incompetence and bias (Weller 2001; Wang et al. 2016; Horbach and Halffman 2019), and the replication crisis (Open Science Collaboration 2015; Baker 2016a; Guttinger and Love 2019) have negatively impacted trust in a process that is foundational to science (Rowland 2002). It is important to note that this erosion of trust is mainly among the general public; researchers continue to show a strong level of trust in peer review as a process to improve the quality of science and scientific publications (Kratz and Strasser 2015; Elsevier and Sense about Science 2019). However, despite researchers' confidence in peer review, its effectiveness is difficult to assess (Jefferson et al. 2002; Bruce et al. 2016; Rennie 2016; Helmer et al. 2017; Lee and Moher 2017; Squazzoni et al. 2020; Squazzoni et al. 2021; Garcia-Costa et al. 2022), and the sheer number of articles has placed the contemporary peer review system under immense strain (Hanson et al. 2023). Together, these factors make it challenging to know which changes would improve the peer review system.

Peer review dates back hundreds of years (Spier 2002), and its basic form has been largely stable since the 19th century. For most of that time it changed little, and there has been no mandatory training in peer review or editing. Peer review and the publishing landscape have changed more in the last two decades than in the preceding centuries (Ware 2005; Tennant 2018), with the replacement of print by online publishing (eliminating physical constraints on space in journals), new peer review models (aimed at reducing bias), preprints (making non-peer-reviewed science available quickly), post-publication peer review (allowing for peer and public discourse), and open access journals (removing financial constraints on readers and, in many cases, shifting that burden to authors). While well-intentioned, these initiatives lack an overall framework for selecting and implementing the most effective and fair peer review models. We propose such a framework in the next section.

A pragmatic harm reduction approach to peer review

Given the erosion of public trust in science in general and peer review in particular, we apply a harm reduction approach (Roe 2005) to improving the quality of the peer review process, partly because such improvements, if implemented, should indirectly help rebuild public trust in science. Harm reduction is sometimes thought to apply only to select and severe problems, such as minimizing the risks of substance use. However, the approach is more nuanced and more widely applicable than this. Harm reduction is a policy approach based on the acknowledgment that recurring social problems are unlikely to be solved, and may in fact be exacerbated, by heavy-handed policies such as prohibition and punishment, because these tend to drive problematic behaviours underground (Marlatt 1996; Dea 2020). Instead, targeted interventions that seek to reduce bad outcomes in individual cases can reduce the overall scale and severity of the societal problem (Lenton and Single 1998; Hawk et al. 2017). A harm reduction approach to peer review acknowledges that its problems are not fully resolvable (Roe 2005; Hawk et al. 2017; Lee and Moher 2017), as no policy or procedure, however extensive, will entirely eliminate bad actors who engage in intentional deception, unscrupulous publishers, the perverse incentives that reward unethical behaviour, or unconscious bias. However, the scientific community can act to reduce the harms stemming from these behaviours by adopting practices that reduce bad outcomes, thereby indirectly (re)building public trust in the peer review process and, in turn, in science as a trusted source of information.

Although much has been written about peer review, it is often approached from the perspective of a single discipline or journal rather than by considering, more broadly, the urgent issues that are universally relevant (e.g., De Vries et al. 2009). This perspective, written by scholars from diverse disciplinary backgrounds, is an invitation to more open conversations about the challenging realities of peer review and its inherent limitations, because a peer review (i.e., quality control) system is needed, even if it is imperfect (Resnick 2011). Drawing on the crisis-of-trust literature, we argue that scholars and knowledge users need to be aware of and acknowledge the imperfections of peer review while identifying and implementing changes that improve the process. Specifically, we as scientists should: (1) openly and explicitly identify and acknowledge the shortcomings of peer review, (2) be receptive and responsive to criticisms of the process (and of ourselves), and (3) have short- and long-term plans to evaluate peer review and minimize the impact of systemic structural problems. We identify eight shortcomings of peer review, including these systemic issues, and suggest ways to minimize them from a harm reduction perspective.

Peer review reality checks

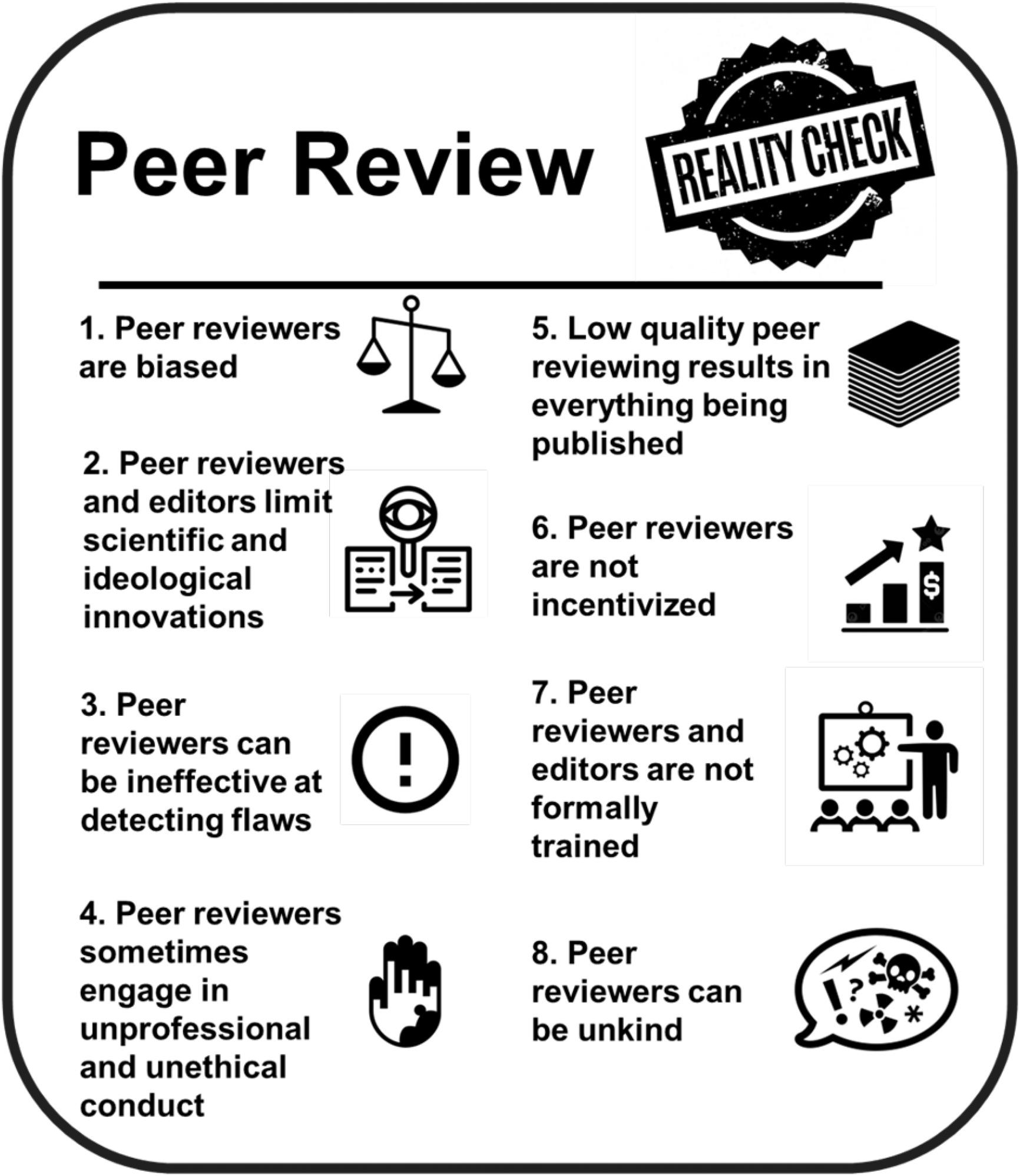

We present eight pre-publication peer review reality checks (see Fig. 1) that openly acknowledge the shortcomings of peer review, and we suggest ways to minimize their effects on trust in science, both among practitioners and among the general public.

Fig. 1.

1. Peer reviewers are biased. Humans are biased; therefore, their actions are biased, and any artificial systems trained on human judgements will be as well. Bias affects both main functions of peer review: selecting which papers should be published and improving them (Doctor et al. 2001; Smith 2010). Selection involves an initial filtering of submissions by journal editors and, for manuscripts deemed worth pursuing, an external quality assessment by expert third parties. There are three main sources of bias in this process (Lee et al. 2013). First, reviews can be biased by the characteristics and biases of the selected reviewers (Fox et al. 2017b), including their gender, discipline, and geographic or cultural background (Lee et al. 2013). Second, reviewers may tend to accept papers that support their preferred hypotheses (confirmation bias) and that report positive outcomes (publication bias) (Browman 1999; Lee et al. 2013). To mitigate bias in reviewer selection, reviewers should come from diverse backgrounds; greater diversity in perspectives should also reduce confirmation bias. Editors need to be aware of publication bias when selecting papers and assessing reviews, and should be trained to prioritize sound science over positive results (Resnik and Elmore 2016).

The third form of bias is the trickiest to address. It arises when the perceptions and decisions of editors and reviewers are influenced by an author’s prestige or status (Huber et al. 2022), institutional affiliation, race (Strauss et al. 2023), nationality, language, or gender (Lee et al. 2013). Various structural and procedural initiatives have emerged in an attempt to reduce these biases. Under double (reviewer and author) anonymity, journals hide the author’s identity to reduce harm from reviewers' unconscious biases; compared with single (reviewer) anonymity, this either reduces bias (McNutt et al. 1990; Blank 1991; Budden et al. 2008; Tomkins et al. 2017; Sun et al. 2022; Fox et al. 2023) or has no effect on it (Van Rooyen et al. 1999; Webb et al. 2008; Carlsson et al. 2012; Cassia-Silva et al. 2023). None of these studies evaluated the experience of editors and reviewers, particularly their training in dealing with unconscious and conscious bias. Training is important to help overcome biases and reduce harm, but it should be implemented in conjunction with procedural changes, as training alone does not always change perspectives (Schroter et al. 2004; Bruce et al. 2016). Any harm reduction measure also creates opportunities for circumvention: technology (Bauersfeld et al. 2023), preprints (Rastogi et al. 2022; Sun et al. 2024), and even the increased use of code repositories (e.g., GitHub) and of study preregistration or registered reports (both otherwise positive developments) now make it easier to circumvent anonymity and discover an author’s identity. Open identities (Ross-Hellauer 2017), where authors and reviewers know each other's identities but the reviews are not made public, are meant to improve review quality and accountability and to lead to more constructive and thoughtfully worded reviews, although evidence for those outcomes remains sparse.
Open reports (Ross-Hellauer 2017) take this one step further: the reviews and (usually) the names of reviewers are publicly released with the published article. This transparency is meant to promote greater accountability of reviewers and authors to the wider community: conflicts of interest become more obvious, readers can judge whether reviewer criticisms and author responses were appropriate, reviewers should be more conscientious, and quality reviews are incentivized because they can be used for career advancement (Walsh et al. 2000; Polka et al. 2018; Bravo et al. 2019). Open reports tend to be of higher quality than doubly anonymous reviews (Bruce et al. 2016; Haffar et al. 2019), but open reporting is not yet widely adopted (Tennant 2018), and more research is needed. Relative to single (reviewer) anonymity, harm might be reduced by hiding author identities from reviewers and editors, by having reviewers sign their reviews (Parmanne et al. 2023), and by making signed reviews public. However, open identities or reports can introduce other forms of harm. For example, if early career researchers decline to review manuscripts by established researchers for fear of reprisal (Rodriguez-Bravo et al. 2017), alternative viewpoints may be lost from a reviewer pool that already represents only a subset of the available viewpoints (Kovanis et al. 2016). The evidence base for how open review affects bias and quality is still slim (Ross-Hellauer et al. 2023), and most studies focus on attitudes rather than outcomes (Ross-Hellauer and Horbach 2024). So far, the evidence shows either no change or positive effects of moving away from systems that rely on single (reviewer) anonymity, with its known harms; further experimental studies are needed to fully assess this. Importantly, because it is the people doing the reviewing who introduce bias, no structural or procedural solution will completely eliminate it.
While some forms of bias may be reduced by any given peer review model, others may be introduced. Thus, it may be premature to fully embrace any of the aforementioned approaches to harm reduction except in experimental contexts (i.e., to learn what works and what doesn’t). The most prudent, immediate opportunity for harm reduction is through training referees and editors in mitigating bias. In addition, journals could consider offering authors the option of choosing which peer review model they prefer to be applied in the assessment of their work.

2. Peer reviewers and editors limit scientific and ideological innovations. Academics face disincentives to pursue and publish research that goes against the dominant ideologies or theories in a field (Kempner et al. 2011). Peer review contributes to this, as reviewers and editors can (intentionally or not) enforce conformity with ingrained perspectives and ideas (Hojat et al. 2003). The supposedly free field of academic inquiry is in fact highly patterned and uneven, with islands of intense attention to fashionable topics and perspectives within a sea of broader neglect (Frickel et al. 2010; Stephan 2012). Similarly, there is growing recognition of resistance to other knowledge systems, such as Indigenous Knowledge (Loseto et al. 2020). Harm can be reduced if reviewers and editors are trained to evaluate papers on their soundness (regardless of whether they conform), not to require authors to change their content, interpretation, or language without a compelling and well-supported justification (i.e., not based only on the reviewer’s or editor’s opinion), and to ensure that authors can disagree with reviewers and editors without fear of rejection.

3. Peer reviewers can be ineffective at detecting flaws. No form of peer review will detect all instances of unintentional error (Park et al. 2021) or misconduct, whether questionable research practices (Fraser et al. 2018) or outright fraud (Van Noorden 2014a). This is because peer review relies on trusting that authors have presented results that reflect how experiments were actually conducted and data were collected (Tennant and Ross-Hellauer 2020). Practices such as preregistration (registering data collection and analysis methods before a study begins) and publicly sharing materials that support reproducibility (open data and code; Culina et al. 2020) can reduce harm by making it easier to detect errors or misconduct earlier in the process (but see Berberi and Roche 2022), although how many reviewers actually check the data or code is unknown. Preregistration is now mandated for clinical trials (e.g., Government of Canada 2018; National Institutes of Health 2021), but adoption is not widespread (Nosek et al. 2018; Alayche et al. 2023). In ecology and evolution, 20% of journals now mandate open data as a condition of publication (Berberi and Roche 2022), yet few of these outlets require authors to share data with reviewers at submission. Harm is likely to be reduced by open data practices and their integration into the peer review process, so that reviewers can better assess the quality of the data in a study; however, this will increase the time required per review. Research into which peer review procedures are best at flagging erroneous and fraudulent research is ongoing (Horbach and Halffman 2019), and training may need to target specific areas (Zheng et al. 2023). Recent innovations in automation, including software (e.g., statcheck; Baker 2016b; STM n.d.) and artificial intelligence (Price and Flach 2017), may be better and faster than humans at detecting flaws in data analysis, although they may be best suited to routine tasks such as verifying references (as some publishers already do; Schulz et al. 2022) and detecting image manipulation (Hosseini and Resnik 2024).

4. Peer reviewers sometimes engage in unprofessional and unethical conduct. Although rare, particularly in well-run journals (Smith 2006), unprofessional and unethical conduct unfortunately exists within the peer review process. Editors and reviewers can block (reject) or delay articles by competitors working on similar research to control the narrative or to be the first to publish, and reviewers can submit unprofessional or dishonest reviews. In one study, nearly half of scientists had been asked by reviewers or editors to alter arguments in a way that contradicted their own scientific judgement (Shibayama and Baba 2015). More distressingly, many authors reported making such changes to ensure publication (see also Frey 2003; Tsang and Frey 2007). Similarly, citation coercion can occur when reviewers insist that their own papers be cited (McLeod 2021) or when a journal requests that more articles from that journal be cited, sometimes under the threat of rejection (Wilhite and Fong 2012). Authors may behave unethically by submitting positive reviews of their own manuscripts under the names of fictitious reviewers, or by having friends do so (Ferguson et al. 2014; Haug 2015; Skórzewska-Amberg 2022). Unscrupulous editors and reviewers can steal ideas or plagiarize content (Rennie 2003; Smith 2006; Rennie 2016), although open peer review with timestamped submissions could help reduce this (Gipp et al. 2017). Harm is reduced by educating editors and reviewers about publication ethics, by training editors to notice warning signs of fabricated reviews (such as very fast turnaround times and generic email domains), and by empowering institutional research integrity offices to penalize bad actors (e.g., COPE 2011, 2017; Basil et al. 2023).

5. Low-quality peer review results in everything being published. Peer review is increasingly about determining where work is published rather than whether it is published (Peres-Neto 2016). A major contributing factor is the emergence of journals and publishers that are willing to publish anything for a fee (Ward 2016; Beall 2017; Strielkowski 2018; Grudniewicz et al. 2019; Cortegiani et al. 2020; Mills and Inouye 2021), including open access mega journals with limited scientific stringency or selectivity (Wakeling et al. 2017). Preprints and draft manuscripts posted on servers (e.g., arxiv.org; Van Schalkwyk et al. 2020) represent a high-risk approach to publishing because research results are made public whenever the authors decide, without any pre-publication review, and they can be difficult to distinguish from peer-reviewed articles, particularly for the public. Preprints can be an opportunity to refine a manuscript, but only if they are peer reviewed. However, only a small percentage of preprints are currently peer reviewed, mainly because there is no mechanism requiring such review and (typically) no arbiters (i.e., editors) to oversee it (but see https://peercommunityin.org/ and https://prereview.org for examples of nascent developments in that sphere). While not everything published in “top tier” journals is of high quality, and vice versa (Moher et al. 2017b), the probability that a paper is of high quality differs among journal tiers (Happe 2020; Stephen 2024). Readers need to be taught to assess paper quality independently of where papers are published (i.e., to apply Mertonian academic scepticism), as peer review rigour varies widely across journals and across articles within a given journal because, as already noted, all editors and reviewers have their own perspectives, biases, and uncertainties (Barnett et al. 2021).
The harm of poor reviewers/editors is reduced when there is more than one editor involved and they engage in stricter pre-screening, when at least three reviewers are used per paper, and when reviewer recommendations to accept must be unanimous (Neff and Olden 2006).

6. Peer reviewers are not incentivized. The scholarly peer review system relies almost entirely on volunteer labour, with billions of dollars’ worth of time donated yearly (Aczel et al. 2021). Although reviewers are typically employed by research institutions, peer review is expected of them but is usually not explicitly part of their job description. The ask is not small: most reviewers evaluate several papers per year and typically spend 3–6 h per review, while “mega reviewers” assess over 100 papers per year (Ware and Mabe 2015; Rice et al. 2022). Because this effort is often not recognized, participating (or not) in peer review does not affect career advancement (Bianchi et al. 2018). Given that most people in the research community are already over-committed and struggle with work-life balance, declining (or ignoring) peer review requests for lack of time is common (e.g., Willis 2016; Sipior 2018; Stafford 2018) and has been increasing (Albert et al. 2016; Fox et al. 2017a). Peer review needs to be incentivized to reverse this trend and to increase the diversity of reviewers (Laxdal and Haugen 2024). Recognizing the contribution of reviewers will reduce harm and can be accomplished through public acknowledgment (e.g., Publons or ORCID; Van Noorden 2014b; Hanson et al. 2016), by having reviewing count towards funding and promotion (Clausen and Nielsen 2003), by paying referees for their services (Lortie 2011; Brainard 2021), or by providing other incentives (e.g., a discount on or waiver of publishing fees in an open access journal, or another product from a publisher), although such incentives may decrease review quality (Squazzoni et al. 2013). In theory, counting high-quality peer reviews as contributions to scholarly performance would make it easier to justify the additional time needed to increase the number of reviewers per article and to have reviewers assess data quality (Ferris and Brumback 2010).

7. Peer reviewers and editors are not formally trained. In most professions that involve passing judgement on individuals (e.g., judges, law enforcement officers, professional sports referees), legislation or policy dictates how these judgements take place, and failure to judge appropriately can mean loss of responsibilities or termination. Although peer review is a central component of the scientific process, formal training or certification for peer reviewers or editors is not mandated by employers or journals, and the consequences of performing poorly are limited, except in the most extreme cases. Instead, training depends on having a willing mentor, and because mentorship quality varies, so does how scientists learn to evaluate manuscripts. Training can increase agreement among reviewers on a given manuscript (Strayhorn Jr et al. 1993) and the probability of rejection (Schroter et al. 2004), without affecting the time reviewers take to evaluate manuscripts (Schroter et al. 2004). A growing number of resources and training programs are available (COPE 2017; Galica et al. 2018; Foster et al. 2021a, 2021b; Willis et al. 2022; Buser et al. 2023; C4DISC n.d.), but these need to be tested for effectiveness, and further research on the best types of training is necessary, as not all appear to lead to higher quality reviews (Callaham and Schriger 2002; Schroter et al. 2008; Bruce et al. 2016; but see Galipeau et al. (2015) and Lyons-Warren et al. (2024)). Harm caused by lack of training will be reduced by adopting the core competencies for editors and reviewers that are continually being developed and updated (e.g., Moher et al. 2017a; COPE 2019; Proctor et al. 2023).

8. Peer reviewers can be unkind. The necessity of being critical when evaluating scientific work can easily express itself in harsh, unconstructive comments rather than supportive and collegial ones. This becomes a problem when demeaning comments or ad hominem attacks creep into reviews. For example, in a survey of >1100 scientists, more than half said that they had received at least one unprofessional review in their career (Silbiger and Stubler 2019), and Gerwing et al. (2020) reported demeaning comments in 10%–35% of peer reviews in ecology and evolution and in 43% in behavioural medicine. Harm will be eliminated at the source when reviewers learn to refrain from making demeaning comments directed at authors and instead use neutral or positive language directed at the science (Clements 2020; Parsons and Baglini 2021); signed reviews may help, as anonymity is one reason reviewers bully authors (Comer and Schwartz 2014). Well-trained and engaged editors can prevent this harm from being passed on by redacting or editing unkind reviewer text (Gerwing et al. 2021) and by calling out such behaviour; clear journal guidelines for these situations will help (e.g., COPE 2021).

Post-publication harm reduction approaches to address the imperfections of the peer review system

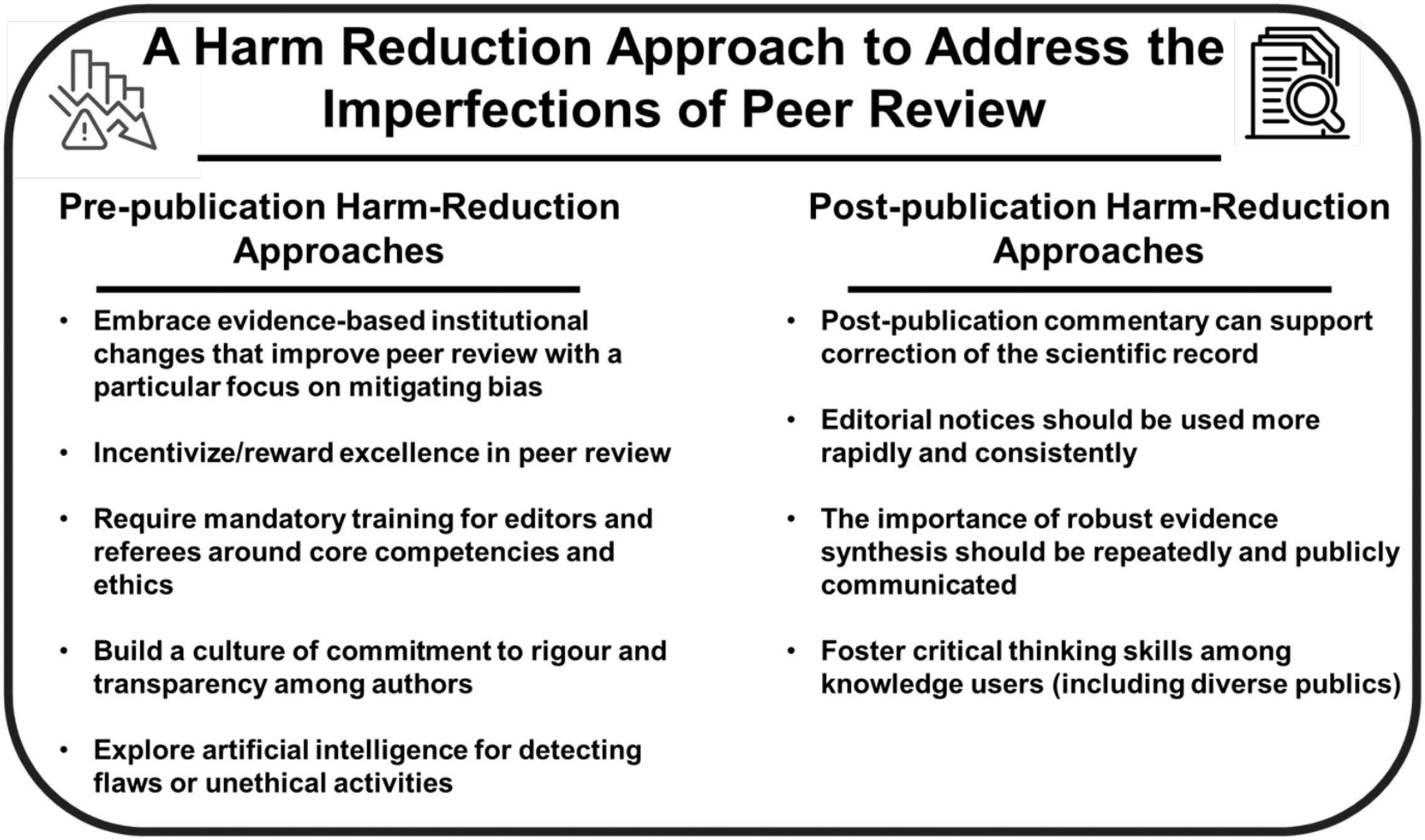

In addition to the initiatives described above, we advance the following four suggestions for developing a harm reduction plan for instances when peer review by itself is insufficient (Fig. 2).

Fig. 2.

1. Post-publication commentary can support correction of the scientific record. When a paper is accepted by a journal, the formal peer review process ends. Yet, with the advent of digital communication platforms, there are opportunities for “ad hoc” peer review (by people who were not involved in the pre-publication review) to continue after publication (Townsend 2013). The most common platform is PubPeer (https://pubpeer.com/; also available as a web browser extension), where anyone can begin discussions (positive or negative) of any published paper, although it has been criticized for allowing anonymity (Torny 2018). Such platforms can also introduce other harms, including personal attacks, targeted harassment, and vendettas against competitors. Some journals have also instituted post-publication review, but it has yet to be widely embraced. Journals could better foster post-publication critique by training editors as curators, following guidelines from the Committee on Publication Ethics (COPE). Unfortunately, some journals and publishers are unwilling to enable such discourse, and where journals lack legitimacy (e.g., predatory publishers), hosting it is of little help. If these forums become the norm, some of the harms of pre-publication peer review (especially missed fundamental flaws) may be corrected post-publication: two-thirds of comments on PubPeer (Ortega 2021) and half of those on journal websites (Wakeling et al. 2020) concerned the paper’s soundness. The imperfections in published studies can reveal what is needed to increase quality, so that future studies are more likely to be reliable (Cooke et al. 2017). Some of the solutions already presented, such as open data sharing, will facilitate post-publication review, especially as shared data should remain accessible beyond individual careers and lifetimes.
The most obvious form of harm reduction is enhanced training of editors, referees, and authors in how to engage constructively in post-publication review, so that those efforts are civil and follow guidelines established by entities such as COPE.

2. Editorial notices should be used more rapidly and consistently. Editorial notices, in the form of notes, corrections, expressions of concern, or retractions, are mechanisms by which formal concerns about a paper can be made public (Noonan and Parrish 2008; Teixeira da Silva and Dobránszki 2017). Whenever a post-publication critique identifies significant issues, the journal editorial team should address them in a timely manner. Editorial notices can relate to the validity of data, methods, or data interpretation, or to manipulated or reused images (Vaught et al. 2017). Depending on the type of concern, it can be resolved through discussion with the author (i.e., determined not to be an issue), or it can result in action such as a correction, expression of concern, or retraction (Knoepfler 2015; Didier and Guaspare-Cartron 2018). Such cases can involve complexities and legal action, so it may take years before notices are issued or cases resolved (Ortega 2021). Furthermore, unsound studies can continue to be used and cited incorrectly well after they have been corrected or retracted (i.e., “zombie papers”; Binning et al. 2018; Brainard 2022). The potential harm of zombie papers and uncorrected science would be reduced by better procedures and standards for the use of retractions (https://publicationethics.org/retraction-guidelines; Teixeira da Silva and Dobránszki 2017) and by making the process more open and transparent (Teixeira da Silva and Yamada 2021), including better and more prominent linking of rebuttals to the original articles (Banobi et al. 2011; https://www.csescienceeditor.org/article/introducing-the-niso-crec-guidelines/).

3. The importance of robust evidence synthesis should be repeatedly and publicly communicated. Research is conducted by people, which means that imperfections will always be present (Olson 2008); variation and uncertainty are also inherent properties of systems, making it difficult to achieve the level of certainty often desired by decision makers (Malnes 2006). Because there is no single standard for how to do research across all fields, it is unlikely that there will be consensus on what constitutes an “adequate” study. Relying too heavily on a single study or a small number of empirical studies can be risky, as every study has some weakness or limitation that influences its reliability and broader relevance. Evidence synthesis is useful for collating knowledge on a given topic to identify broad patterns and assess treatment effect sizes and uncertainty. There are many forms of evidence synthesis that themselves vary in rigour and robustness (Donnelly et al. 2018), with systematic reviews (including meta-analysis) following established practices (e.g., The Cochrane Collaboration, the Campbell Collaboration) being the gold standard (Gough et al. 2017). These have a critical appraisal phase where individual studies are evaluated against predetermined criteria (Burls 2009), and studies that are biased or flawed are either excluded or down-weighted, with justification given in a transparent manner. In other words, not all evidence is considered equal. This is the key harm reduction achieved by using evidence syntheses: readers are protected against the undue influence of a single paper, which reduces the harm caused by the imperfect screening of the peer review process. These reviews inform policy and practice in several key sectors (e.g., health care, education, the environment) but could be more widely embraced in other knowledge domains.
It is unclear how much the general public (and even some researchers) know about such syntheses, so we recommend that their purpose and utility be better communicated.

4. Foster development of critical thinking skills among all knowledge consumers. It takes a great deal of time, knowledge, and experience to read and understand a given study and to consider its strengths and weaknesses by applying Mertonian organized skepticism to it. Because of this, the peer-reviewed literature is put on a pedestal, and readers are cautioned against relying on other sources such as grey literature, preprints, blogs, or webpages. It is important that everyone who consumes scientific information (e.g., policy makers, industry professionals, the general public, and researchers) be trained to understand the complexities of knowledge generation, so that they can develop the critical thinking skills needed to identify and weigh the reliability of any given piece of information irrespective of where it was sourced (Bailin 2002; Brewer and Gross 2003; Levinson 2006; Durbin 2009; Subramanyam 2013), and so that they understand the importance of systematic reviews and meta-analyses. Harm would be reduced by building a culture within and beyond academia where we teach that limitations and biases are pervasive and often unconscious in science and elsewhere (Weaver 1961), where authors openly acknowledge them where possible (Sumpter et al. 2023), and where strategies to mitigate bias are implemented (through training and structural changes). Related to this recommendation is the opportunity to share positive examples of where peer review has been particularly useful (an idea raised by one of the anonymous referees for this paper) and identified errors that were addressed prior to publication. Peer review is often framed as inherently “negative” given our human tendency to focus on criticism; yet constructive criticism is the whole point of peer review and can improve science and protect the scientific record, which is an entirely positive outcome.

Synthesis and a path forward

Peer review is foundational to the scientific enterprise and carries great weight within the scientific community (De Ridder 2022), policymaking (Oliver et al. 2014), legal and judicial proceedings (Chubin et al. 1995), journalism and media (Young and Dugas 2012), and activism (Fähnrich 2018). Acknowledging the fallibility of peer review systems and the people involved with them is an essential starting point for implementing a harm reduction approach and driving improvements.

A harm reduction approach recognizes peer review’s strengths while striving to minimize the problems that lead to scandal and mistrust by encouraging targeted interventions that seek to reduce bad outcomes in individual cases. The eight reality checks about peer review that we have presented are an attempt to do this. For each, we outlined steps to reduce the likelihood of harmful outcomes. In addition to the harm reduction that training will provide, we recommend that several institutional and procedural changes continue to be studied to clarify the extent to which they achieve the desired outcome and actually reduce harm. These include continuing to evaluate reviewer/author anonymity structures and perhaps moving away from single (reviewer) anonymity toward open (transparent) and signed reviews; integrating open data practices into the peer review process; having more than one editor, each performing stricter pre-screening; having at least three reviewers per paper with a unanimous criterion for acceptance; selecting reviewers with diverse backgrounds; acknowledging that peer review is like other forms of mentorship in the scientific community and formally recognizing it for career credit; and making sure that bad actors face consequences. Public trust will be increased by encouraging post-publication commentary; making the process of issuing editorial notices streamlined and transparent; making the process of whistleblowing safe and effective; and encouraging the use of evidence synthesis.

Training will reduce specific harms in peer review (Fig. 2) when reviewers and editors learn not to require authors to change their language without substantial justification, and when authors have the opportunity to disagree without fear of rejection; when reviewers and editors learn to recognize and avoid citation coercion, to evaluate papers based on soundness instead of ideological conformity or positive results, and to refrain from making demeaning comments directed at authors. This sort of training will require the development of core competencies for editors and reviewers (e.g., https://webofscienceacademy.clarivate.com/learn; EASE 2024). There may be a need to incentivize training, or even make it mandatory (e.g., you are unable to submit or review articles for a collective of publishers without training), because voluntary attendance and motivation depend on socio-demographic factors. We note that specific training is also needed to reduce harm before peer review happens (Fig. 2). For example, only about 20% of authors read the papers they cited (Simkin and Roychowdhury 2003), and 25% of citations were inappropriate (Todd et al. 2010), including the continued citation of studies known to be severely flawed (Binning et al. 2018; Berenbaum 2021).

Some initiatives will undoubtedly air more dirty laundry about the peer review process; however, the trust crisis literature tells us that instead of hiding in shame, we should be open about these flaws to (re)build public confidence in this process. Collaborative efforts are needed to test novel interventions targeting improvements in peer review, including collaborative peer review (Mehmani 2019). We should embrace observational analysis and experimentation (which requires the cooperation of publishers) to test procedural changes (Ross-Hellauer et al. 2023) and methods of training (Carter et al. 2020), and engage with stakeholders to work towards improved systems that address the flaws discussed throughout this paper (Lee and Moher 2017). From there, the scientific community, in collaboration with professional associations and publishers, can demonstrate its good faith by widely and consistently implementing the best procedural and training reforms to enhance transparency and reduce bias. This will be seen as a challenging gambit in some quarters, but in our view, ignoring the problem by separating publication from peer review (Tennant et al. 2017) or seeking to “blow up” or circumvent peer review are riskier options that will result in more scandal. Efforts need to focus on improving peer review rather than abandoning it (Haffar et al. 2019). The hard and incremental work inspired by harm reduction approaches offers a way forward.

Today, we continue to face many challenges ranging from climate change to public health crises. The fact that peer review is imperfect should not hobble our ability to make decisions and address urgent issues based on science; instead, the urgency should drive us to do better. We need to acknowledge the uncertainty inherent in every study and the imperfections of peer review. These realities are a compelling reason to ensure that decision-makers are well-versed in how peer review works and understand the value of evidence syntheses, and they underscore the overwhelming need for more training. Developing skills in critiquing research and encouraging conversations about the strengths and weaknesses of the system and of particular studies is critical and will require a cultural shift, one for the better (Bastian 2014; Fig. 2).

Acknowledgements

We thank Michael Donaldson and two anonymous referees for valuable insights that improved the paper.

References

Aczel B., Szaszi B., Holcombe A.O. 2021. A billion-dollar donation: estimating the cost of researchers’ time spent on peer review. Research Integrity and Peer Review, 6: 1–8.

Ahmed S. 2021. Building trust in journals and in peer review: need of the hour during the COVID-19 pandemic. Rheumatology International, 41(2): 501–502.

Alayche M., Cobey K.D., Ng J.Y., Ardern C.L., Khan K.M., Chan A.-W., et al. 2023. Evaluating prospective study registration and result reporting of trials conducted in Canada from 2009 to 2019. FACETS, 8: 1–10.

Albert A.Y., Gow J.L., Cobra A., Vines T.H. 2016. Is it becoming harder to secure reviewers for peer review? A test with data from five ecology journals. Research Integrity and Peer Review, 1(1): 1–8.

Alexandre M., Willman A., Aslam G., Rebosio M., Balasuriya K. 2013. Societal Dynamics and Fragility: Engaging Societies in Responding to Fragile Situations. New frontiers of social policy. The World Bank, Washington DC.

Altenmüller M.S., Nuding S., Gollwitzer M. 2021. No harm in being self-corrective: self-criticism and reform intentions increase researchers’ epistemic trustworthiness and credibility in the eyes of the public. Public Understanding of Science, 30(8): 962–976.

Bailin S. 2002. Critical thinking and science education. Science & Education, 11(4): 361–375.

Baker M. 2016a. 1,500 scientists lift the lid on reproducibility. Nature, 533(7604): 452–454.

Baker M. 2016b. Stat-checking software stirs up psychology. Nature, 540(7631): 151–152.

Banobi J.A., Branch T.A., Hilborn R. 2011. Do rebuttals affect future science? Ecosphere, 2(3): 1–11.

Barnett A., Allen L., Aldcroft A., Lash T.L., McCreanor V. 2021. Examining uncertainty in journal peer reviewers’ recommendations: a cross-sectional study. Preprint.

Basil D.Z., Burton S., Soboleva A., Nesbit P. 2023. Coercive citation: understanding the problem and working toward a solution. Academy of Management Perspectives, 37(3): 205–219.

Bastian H. 2014. A stronger post-publication culture is needed for better science. PLoS Medicine, 11(12): e1001772.

Bauersfeld L., Romero A., Muglikar M., Scaramuzza D. 2023. Cracking double-blind review: authorship attribution with deep learning. PLoS ONE, 18(6): e0287611.

Beall J. 2017. What I learned from predatory publishers. Biochemia Medica, 27(2): 273–278.

Berberi I., Roche D.G. 2022. No evidence that mandatory open data policies increase error correction. Nature Ecology & Evolution, 6(11): 1630–1633.

Berenbaum M.R. 2021. On zombies, struldbrugs, and other horrors of the scientific literature. Proceedings of the National Academy of Sciences, 118(32): e2111924118.

Bianchi F., Grimaldo F., Bravo G., Squazzoni F. 2018. The peer review game: an agent-based model of scientists facing resource constraints and institutional pressures. Scientometrics, 116(3): 1401–1420.

Binning S.A., Jutfelt F., Sundin J. 2018. Exorcise citations to the ‘living dead’ from the literature. Nature, 558(7709): 189.

Black S., Rappuoli R. 2010. A crisis of public confidence in vaccines. Science Translational Medicine, 2(61): 61mr1.

Blank R.M. 1991. The effects of double-blind versus single-blind reviewing: experimental evidence from the American Economic Review. The American Economic Review, 81(5): 1041–1067.

Bohannon J. 2013. Who's afraid of peer review? Science, 342(6154): 60–65.

Brainard J. 2021. The $450 question: should journals pay peer reviewers? Science.

Brainard J. 2022. Silence greets requests to flag retracted studies. Nature Communications, 10: 11–12.

Bravo G., Grimaldo F., López-Iñesta E., Mehmani B., Squazzoni F. 2019. The effect of publishing peer review reports on referee behavior in five scholarly journals. Nature Communications, 10(1): 1–8.

Brewer C.A., Gross L.J. 2003. Training ecologists to think with uncertainty in mind. Ecology, 84(6): 1412–1414.

Browman H.I. 1999. Negative results. Marine Ecology Progress Series, 191: 301–309.

Bruce R., Chauvin A., Trinquart L., Ravaud P., Boutron I. 2016. Impact of interventions to improve the quality of peer review of biomedical journals: a systematic review and meta-analysis. BMC Medicine, 14(1): 85.

Bucci E., Andreev K., Björkman A., Calogero R.A., Carafoli E., Carninci P., et al. 2020. Safety and efficacy of the Russian COVID-19 vaccine: more information needed. The Lancet, 396(10256): e53.

Budden A.E., Tregenza T., Aarssen L.W., Koricheva J., Leimu R., Lortie C.J. 2008. Double-blind review favours increased representation of female authors. Trends in Ecology & Evolution, 23(1): 4–6.

Burls A. 2009. What is critical appraisal? 2nd ed. Hayward Medical Communications, Newmarket, UK.

Buser J.M., Morris K.L., Dzomeku V.M., Endale T., Smith Y.R., August E. 2023. Lessons learnt from a scientific peer-review training programme designed to support research capacity and professional development in a global community. BMJ Global Health, 8(4): e012224.

Byrne J. 2019. We need to talk about systematic fraud. Nature, 566(7742): 9–10.

Callaham M.L., Schriger D.L. 2002. Effect of structured workshop training on subsequent performance of journal peer reviewers. Annals of Emergency Medicine, 40(3): 323–328.

Carlsson F., Löfgren Å., Sterner T. 2012. Discrimination in scientific review: a natural field experiment on blind versus non-blind reviews. The Scandinavian Journal of Economics, 114(2): 500–519.

Carter E.R., Onyeador I.N., Lewis N.A., Jr. 2020. Developing & delivering effective anti-bias training: challenges & recommendations. Behavioral Science & Policy, 6(1): 57–70.

Cássia-Silva C., Rocha B.S., Liévano-Latorre L.F., Sobreiro M.B., Diele-Viegas L.M. 2023. Overcoming the gender bias in ecology and evolution: is the double-anonymized peer review an effective pathway over time? PeerJ, 11: e15186.

Chubin D.E., Hackett E.J., Solomon S.M. 1995. Peer review and the courts, or when scientists “get real”. Accountability in Research, 4(1): 1–8.

Clark T.D., Binning S.A., Raby G.D., Speers-Roesch B., Sundin J., Jutfelt F., Roche D.G. 2016. Scientific misconduct: the elephant in the lab. A response to Parker et al. Trends in Ecology & Evolution, 31(12): 899–900.

Clausen T., Nielsen O.B. 2003. Reviewing should be shown in publication list. Nature, 421(6924): 689.

Clements J.C. 2020. Don't be a prig in peer review. Nature, 585(7825): 472.

Coalition for Diversity & Inclusion in Scholarly Communications (C4DISC). N.d. A focused toolkit for journal editors and publishers: building diversity, equity, inclusion, and accessibility in editorial roles and peer review [online].

Comer D.R., Schwartz M. 2014. The problem of humiliation in peer review. Ethics and Education, 9(2): 141–156.

Committee on Publication Ethics (COPE). 2011. Code of conduct and best practice guidelines for journal editors [online]. Available from https://publicationethics.org/files/Code_of_conduct_for_journal_editors_Mar11.pdf [accessed July 2024].

Committee on Publication Ethics (COPE). 2017. Ethical guidelines for peer review [online].

Committee on Publication Ethics (COPE). 2019. A short guide to ethical editing for new editors [online].

Committee on Publication Ethics (COPE). 2021. Guidelines: editing peer reviews [online]. Available from https://publicationethics.org/resources/guidelines/editing-peer-reviews [accessed July 2024].

Cooke S.J., Birnie-Gauvin K., Lennox R.J., Taylor J.J., Rytwinski T., Rummer J.L., et al. 2017. How experimental biology and ecology can support evidence-based decision-making in conservation: avoiding pitfalls and enabling application. Conservation Physiology, 5(1): cox043.

Cortegiani A., Manca A., Lalu M., Moher D. 2020. Inclusion of predatory journals in Scopus is inflating scholars’ metrics and advancing careers. International Journal of Public Health, 65(1): 3–4.

Culina A., van den Berg I., Evans S., Sánchez-Tójar A. 2020. Low availability of code in ecology: a call for urgent action. PLoS Biology, 18(7): e3000763.

Cvitanovic C., Shellock R.J., Mackay M., van Putten E.I., Karcher D.B., Dickey-Collas M., Ballesteros M. 2021. Strategies for building and managing ‘trust’ to enable knowledge exchange at the interface of environmental science and policy. Environmental Science & Policy, 123: 179–189.

De Ridder J. 2022. How to trust a scientist. Studies in History and Philosophy of Science, 93: 11–20.

De Vries D.R., Marschall E.A., Stein R.A. 2009. Exploring the peer review process: what is it, does it work, and can it be improved? Fisheries, 34(6): 270–279.

Dea S. 2020. Toward a philosophy of harm reduction. Health Care Analysis, 28(4): 302–313.

Didier E., Guaspare-Cartron C. 2018. The new watchdogs’ vision of science: a roundtable with Ivan Oransky (Retraction Watch) and Brandon Stell (PubPeer). Social Studies of Science, 48(1): 165–167.

Doctor R., Newton D.P., Pearson A. 2001. Managing uncertainty in research and development. Technovation, 21(2): 79–90.

Donnelly C.A., Boyd I., Campbell P., Craig C., Vallance P., Walport M., et al. 2018. Four principles to make evidence synthesis more useful for policy. Nature, 558: 361–364.

Durbin C.G. 2009. How to read a scientific research paper. Respiratory Care, 54(10): 1366–1371.

Else H., Van Noorden R. 2021. The fight against fake-paper factories that churn out sham science. Nature, 591(7851): 516–519.

Elsevier and Sense about Science. 2019. Quality, trust & peer review: researchers’ perspectives 10 years on [online]. Available from https://senseaboutscience.org/wp-content/uploads/2019/09/Quality-trust-peer-review.pdf [accessed July 2024].

European Association of Science Editors (EASE). 2024. EASE toolkits for journal editors [online]. Available from https://ease.org.uk/publications/ease-toolkit-journal-editors/ [accessed July 2024].

Evans A.M., Krueger J.I. 2009. The psychology (and economics) of trust. Social and Personality Psychology Compass, 3(6): 1003–1017.

Fähnrich B. 2018. Digging deeper? Muddling through? How environmental activists make sense and use of science—an exploratory study. Journal of Science Communication, 17(3): A08.

Ferguson C., Marcus A., Oransky I. 2014. Publishing: the peer-review scam. Nature, 515: 480–482.

Ferris L.E., Brumback R.A. 2010. Academic merit, promotion, and journal peer reviewing: the role of academic institutions in providing proper recognition. Journal of Child Neurology, 25(5): 538–540.

Foster A., Hindle S., Murphy K.M., Saderi D. 2021a. Open reviewers reviewer guide [online].

Foster A., Hindle S., Murphy K.M., Saderi D. 2021b. Open reviewers bias reflection guide [online].

Fox C.W., Albert A.Y.K., Vines T.H. 2017a. Recruitment of reviewers is becoming harder at some journals: a test of the influence of reviewer fatigue at six journals in ecology and evolution. Research Integrity and Peer Review, 2: 3.

Fox C.W., Burns C.S., Muncy A.D., Meyer J.A. 2017b. Author-suggested reviewers: gender differences and influences on the peer review process at an ecology journal. Functional Ecology, 31(1): 270–280.

Fox C.W., Meyer J., Aimé E. 2023. Double-blind peer review affects reviewer ratings and editor decisions at an ecology journal. Functional Ecology, 37(5): 1144–1157.

Fraser H., Parker T., Nakagawa S., Barnett A., Fidler F. 2018. Questionable research practices in ecology and evolution. PLoS ONE, 13(7): e0200303.

Frey B.S. 2003. Publishing as prostitution? Choosing between one's own ideas and academic success. Public Choice, 116(1–2): 205–223.

Frickel S., Gibbon S., Howard J., Kempner J., Ottinger G., Hess D.J. 2010. Undone science: charting social movement and civil society challenges to research agenda setting. Science, Technology, & Human Values, 35(4): 444–473.

Funk C., Hefferon M., Kennedy B., Johnson C. 2019. Trust and mistrust in Americans’ views of scientific experts. Pew Research Center, 2: 1–96.

Galica J., Chee-a-tow A., Gupta S., Jaiswal A., Monsour A., Tricco A.C., et al. 2018. Learning best-practices in journalology: course description and attendee insights into the inaugural EQUATOR Canada Publication School. BMC Proceedings, 12: 18.

Galipeau J., Moher D., Campbell C., Hendry P., Cameron D.W., Palepu A., Hebert P.C. 2015. A systematic review highlights a knowledge gap regarding the effectiveness of health-related training programs in journalology. Journal of Clinical Epidemiology, 68(3): 257–265.

Garcia-Costa D., Squazzoni F., Mehmani B., Grimaldo F. 2022. Measuring the developmental function of peer review: a multi-dimensional, cross-disciplinary analysis of peer review reports from 740 academic journals. PeerJ, 10: e13539.

Gerwing T.G., Gerwing A.M.A., Avery-Gomm S., Choi C.-Y., Clements J.C., Rash J.A. 2020. Quantifying professionalism in peer review. Research Integrity and Peer Review, 5(1): 1–8.

Gerwing T.G., Gerwing A.M.A., Choi C.-Y., Avery-Gomm S., Clements J.C., Rash J.A. 2021. Re-evaluation of solutions to the problem of unprofessionalism in peer review. Research Integrity and Peer Review, 6(1): 1–5.

Gipp B., Breitinger C., Meuschke N., Beel J. 2017. CryptSubmit: introducing securely timestamped manuscript submission and peer review feedback using the blockchain. In Proceedings of the ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL), Toronto, Canada.

Gough D., Oliver S., Thomas J. 2017. An introduction to systematic reviews. Sage Publications Ltd. 352 p.

Government of Canada. 2018. Tri-Council Policy Statement—Chapter 11: Clinical Trials [online]. Available from https://ethics.gc.ca/eng/tcps2-eptc2_2018_chapter11-chapitre11.html [accessed July 2024].

Grudniewicz A., Moher D., Cobey K.D., Bryson G.L., Cukier S., Allen K., Ardern C., et al. 2019. Predatory journals: no definition, no defence. Nature, 576(7786): 210–212.

Guttinger S., Love A.C. 2019. Characterizing scientific failure: putting the replication crisis in context. EMBO Reports, 20(9): e48765.

Haffar S., Bazerbachi F., Murad M.H. 2019. Peer review bias: a critical review. Mayo Clinic Proceedings, 94(4): 670–676.

Hamilton L.C., Hartter J., Saito K. 2015. Trust in scientists on climate change and vaccines. Sage Open, 5(3): 1–13.

Hanson B., Lawrence R., Meadows A., Paglione L. 2016. Early adopters of ORCID functionality enabling recognition of peer review: two brief case studies. Learned Publishing, 29(1): 60–63.

Hanson M.A., Barreiro P.G., Crosetto P., Brockington D. 2023. The strain on scientific publishing. arXiv preprint arXiv:2309.15884.

Happe L.E. 2020. Distinguishing predatory from reputable publishing practices. Journal of Managed Care & Specialty Pharmacy, 26(8): 956–960.

Haug C.J. 2015. Peer-review fraud—hacking the scientific publication process. New England Journal of Medicine, 373(25): 2393–2395.

Hawk M., Coulter R.W., Egan J.E., Fisk S., Reuel Friedman M., Tula M., Kinsky S. 2017. Harm reduction principles for healthcare settings. Harm Reduction Journal, 14(1): 70.

Helmer M., Schottdorf M., Neef A., Battaglia D. 2017. Gender bias in scholarly peer review. eLife, 6: e21718.

Hendriks F., Kienhues D., Bromme R. 2016. Trust in science and the science of trust. In Trust and communication in a digitized world. Edited by B. Blöbaum. Progress in IS. Springer, Cham. pp. 143–159.

Hojat M., Gonnella J.S., Caelleigh A.S. 2003. Impartial judgment by the “gatekeepers” of science: fallibility and accountability in the peer review process. Advances in Health Sciences Education, 8: 75–96.

Horbach S.P., Halffman W. 2019. The ability of different peer review procedures to flag problematic publications. Scientometrics, 118(1): 339–373.

Hosseini M., Resnik D.B. 2024. Guidance needed for using artificial intelligence to screen journal submissions for misconduct. Research Ethics, 00: 000–000.

Huber B., Barnidge M., Gil de Zúñiga H., Liu J. 2019. Fostering public trust in science: the role of social media. Public Understanding of Science, 28(7): 759–777.

Huber J., Inoua S., Kerschbamer R., König-Kersting C., Palan S., Smith V.L. 2022. Nobel and novice: author prominence affects peer review. Proceedings of the National Academy of Sciences, 119(41): e2205779119.

Hudson J. 2006. Institutional trust and subjective well-being across the EU. Kyklos, 59(1): 43–62.

Jefferson T., Alderson P., Wager E., Davidoff F. 2002. Effects of editorial peer review: a systematic review. JAMA, 287(21): 2784–2786.

Kempner J., Merz J.F., Bosk C.L. 2011. Forbidden knowledge: public controversy and the production of nonknowledge. Sociological Forum, 26(3): 475–500.

Kharasch E.D., Avram M.J., Clark J.D., Davidson A.J., Houle T.T., Levy J.H., et al. 2021. Peer review matters: research quality and the public trust. Anesthesiology, 134(1): 1–6.

Knoepfler P. 2015. Reviewing post-publication peer review. Trends in Genetics, 31(5): 221–223.

Kovanis M., Porcher R., Ravaud P., Trinquart L. 2016. The global burden of journal peer review in the biomedical literature: strong imbalance in the collective enterprise. PLoS ONE, 11(11): e0166387.

Kratz J.E., Strasser C. 2015. Researcher perspectives on publication and peer review of data. PLoS ONE, 10(2): e0117619.

Kreps S.E., Kriner D.L. 2020. Model uncertainty, political contestation, and public trust in science: evidence from the COVID-19 pandemic. Science Advances, 6(43): eabd4563.

Lacey J., Howden M., Cvitanovic C., Colvin R.M. 2018. Understanding and managing trust at the climate science–policy interface. Nature Climate Change, 8(1): 22–28.

Laxdal A., Haugen T. 2024. Where are the carrots? A proposal to start crediting peer reviewers for their contribution to science. Learned Publishing, 37(2): 154–156.

Ledford H., Van Noorden R. 2020. High-profile coronavirus retractions raise concerns about data oversight. Nature, 582(7811): 160.

Lee C.J., Moher D. 2017. Promote scientific integrity via journal peer review data. Science, 357(6348): 256–257.

Lee C.J., Sugimoto C.R., Zhang G., Cronin B. 2013. Bias in peer review. Journal of the American Society for Information Science and Technology, 64(1): 2–17.

Lenton S., Single E. 1998. The definition of harm reduction. Drug and Alcohol Review, 17(2): 213–220.

Levinson R. 2006. Towards a theoretical framework for teaching controversial socio-scientific issues. International Journal of Science Education, 28(10): 1201–1224.

Lewicki R.J., Brinsfield C. 2017. Trust repair. Annual Review of Organizational Psychology and Organizational Behavior, 4: 287–313.

Lewis J.D., Weigert A. 1985. Trust as a social reality. Social Forces, 63(4): 967–985.

Lortie C.J. 2011. Money for nothing and your referees for free. Ideas in Ecology and Evolution, 4. Available from https://ojs.library.queensu.ca/index.php/IEE/article/view/4234 [accessed August 2024].

Loseto L., Breton-Honeyman K., Etiendem D., Johnson N., Pearce T., Allen J., et al. 2020. Indigenous participation in peer review publications and the editorial process: reflections from a workshop. Arctic Science, 6(3): 352–360.

Lyons-Warren A.M., Aamodt W.W., Pieper K.M., Strowd R.E. 2024. A structured, journal-led peer-review mentoring program enhances peer review training. Research Integrity and Peer Review, 9(1): 3.

Malnes R. 2006. Imperfect science. Global Environmental Politics, 6(3): 58–71.

Marlatt G.A. 1996. Harm reduction: come as you are. Addictive Behaviors, 21(6): 779–788.

McKnight D.H., Chervany N.L. 2001. Trust and distrust definitions: one bite at a time. In Trust in cyber-societies. Vol. 2246. Edited by R. Falcone, M. Singh, Y.H. Tan. Lecture Notes in Computer Science, Springer, Berlin, Heidelberg. pp. 27–54.

McLeod S. 2021. Should authors cite sources suggested by peer reviewers? Six antidotes for handling potentially coercive reviewer citation suggestions. Learned Publishing, 34: 282–286.

McNutt R.A., Evans A.T., Fletcher R.H., Fletcher S.W. 1990. The effects of blinding on the quality of peer review: a randomized trial. JAMA, 263(10): 1371–1376.

Mehmani B. 2019. Two heads are better than one: working with a co-reviewer [online]. Available from https://www.elsevier.com/connect/two-heads-are-better-than-one-working-with-a-co-reviewer [accessed July 2024].

Mills D., Inouye K. 2021. Problematizing ‘predatory publishing’: a systematic review of factors shaping publishing motives, decisions, and experiences. Learned Publishing, 34(2): 89–104.

Millstone E., Van Zwanenberg P. 2000. A crisis of trust: for science, scientists or for institutions? Nature Medicine, 6(12): 1307–1308.

Moher D., Galipeau J., Alam S., Barbour V., Bartolomeos K., Baskin P., et al. 2017a. Core competencies for scientific editors of biomedical journals: consensus statement. BMC Medicine, 15(1): 167.

Moher D., Shamseer L., Cobey K.D., Lalu M.M., Galipeau J., Avey M.T., et al. 2017b. Stop this waste of people, animals and money. Nature, 549(7670): 23–25.

National Institutes of Health. 2021. 4.1.3 Clinical Trials Registration and Reporting in ClinicalTrials.gov Requirement [online]. Available from https://grants.nih.gov/grants/policy/nihgps/HTML5/section_4/4.1.3_clinical_trials_registration_and_reporting_in_clinicaltrials.gov_requirement.htm [accessed July 2024].

Neff B.D., Olden J.D. 2006. Is peer review a game of chance? Bioscience, 56(4): 333–340.

Noonan B.M., Parrish D. 2008. Expressions of concern and their uses. Learned Publishing, 21(3): 209–213.

Nosek B.A., Ebersole C.R., DeHaven A.C., Mellor D.T. 2018. The preregistration revolution. Proceedings of the National Academy of Sciences, 115(11): 2600–2606.

Oliver K., Innvar S., Lorenc T., Woodman J., Thomas J. 2014. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Services Research, 14: 1–12.

Olson J. 2008. More than a human endeavor. Science and Children, 45(6): 43.

Open Science Collaboration. 2015. Estimating the reproducibility of psychological science. Science, 349(6251): aac4716.

Ophir Y., Jamieson K.H. 2021. The effects of media narratives about failures and discoveries in science on beliefs about and support for science. Public Understanding of Science, 30(8): 1008–1023.

Ortega J.L. 2021. The relationship and incidence of three editorial notices in PubPeer: errata, expressions of concern, and retractions. Learned Publishing, 34(2): 164–174.

Park Y., West R.A., Pathmendra P., Favier B., Stoeger T., Capes-Davis A., et al. 2021. Human gene function publications that describe wrongly identified nucleotide sequence reagents are unacceptably frequent within the genetics literature. Life Science Alliance, 5(4): e202101203.

Parmanne P., Laajava J., Järvinen N., Harju T., Marttunen M., Saloheimo P. 2023. Peer reviewers' willingness to review, their recommendations and quality of reviews after the Finnish Medical Journal switched from single-blind to double-blind peer review. Research Integrity and Peer Review, 8(1): 14.

Parsons C.E., Baglini R.B. 2021. Peer review: the case for neutral language. Trends in Cognitive Sciences, 25(8): 639–641.

Pennisi E. 2021. Star ecologist put on leave after university probe ends. Science, 374(6571): 1035–1036.

Peres-Neto P.R. 2016. Will technology trample peer review in ecology? Ongoing issues and potential solutions. Oikos, 125(1): 3–9.

Polka J.K., Kiley R., Konforti B., Stern B., Vale R.D. 2018. Publish peer reviews. Nature, 560: 545–547.

Price S., Flach P.A. 2017. Computational support for academic peer review: a perspective from artificial intelligence. Communications of the ACM, 60(3): 70–79.

Proctor D.M., Dada N., Serquiña A., Willett J.L. 2023. Problems with peer review shine a light on gaps in scientific training. mBio, 14(3): e03183-22.

Rastogi C., Stelmakh I., Shen X., Meila M., Echenique F., Chawla S., Shah N.B. 2022. To ArXiv or not to ArXiv: a study quantifying pros and cons of posting preprints online. Preprint. arXiv:2203.17259.

Rennie D. 2003. Misconduct and journal peer review. In Peer Review in Health Sciences. 2nd ed. Edited by F. Godlee, T. Jefferson. BMJ Books, London. pp. 118–129.

Rennie D. 2016. Let's make peer review scientific. Nature, 535(7610): 31–33.

Resnik D.B. 2011. A troubled tradition. American Scientist, 99(1): 24–28.

Resnik D.B., Elmore S.A. 2016. Ensuring the quality, fairness, and integrity of journal peer review: a possible role of editors. Science and Engineering Ethics, 22: 169–188.

Rice D.B., Pham B., Presseau J., Tricco A.C., Moher D. 2022. Characteristics of ‘mega’ peer-reviewers. Research Integrity and Peer Review, 7: 1.

Rodríguez-Bravo B., Nicholas D., Herman E., Boukacem-Zeghmouri C., Watkinson A., Xu J., et al. 2017. Peer review: the experience and views of early career researchers. Learned Publishing, 30(4): 269–277.

Roe G. 2005. Harm reduction as paradigm: is better than bad good enough? The origins of harm reduction. Critical Public Health, 15(3): 243–250.

Ross-Hellauer T. 2017. What is open peer review? A systematic review. F1000Research, 6: 588.

Ross-Hellauer T., Bouter L.M., Horbach S.P. 2023. Open peer review urgently requires evidence: a call to action. PLoS Biology, 21(10): e3002255.

Ross-Hellauer T., Horbach S. 2024. Lack of experimentation has stalled the debate on open peer review [online]. Available from https://blogs.lse.ac.uk/impactofsocialsciences/2024/03/21/lack-of-experimentation-has-stalled-the-debate-on-open-peer-review/ [accessed July 2024].

Rousseau D.M., Sitkin S.B., Burt R.S., Camerer C. 1998. Not so different after all: a cross-discipline view of trust. Academy of Management Review, 23(3): 393–404.

Rowland F. 2002. The peer-review process. Learned Publishing, 15(4): 247–258.

Rutjens B.T., van der Linden S., van der Lee R. 2021. Science skepticism in times of COVID-19. Group Processes & Intergroup Relations, 24(2): 276–283.

Sabel B.A., Knaack E., Gigerenzer G., Bilc M. 2023. Fake publications in biomedical science: red-flagging method indicates mass production. Preprint.

Schroter S., Black N., Evans S., Carpenter J., Godlee F., Smith R. 2004. Effects of training on quality of peer review: randomised controlled trial. BMJ, 328(7441): 673.

Schroter S., Black N., Evans S., Godlee F., Osorio L., Smith R. 2008. What errors do peer reviewers detect, and does training improve their ability to detect them? Journal of the Royal Society of Medicine, 101(10): 507–514.

Schulz R., Barnett A., Bernard R., Brown N.J., Byrne J.A., Eckmann P., et al. 2022. Is the future of peer review automated? BMC Research Notes, 15(1): 203.

Shibayama S., Baba Y. 2015. Dishonest conformity in peer review. Prometheus, 33(3): 215–233.

Silbiger N.J., Stubler A.D. 2019. Unprofessional peer reviews disproportionately harm underrepresented groups in STEM. PeerJ, 7: e8247.

Simkin M.V., Roychowdhury V.P. 2003. Read before you cite! Preprint. Available from https://arxiv.org/ftp/cond-mat/papers/0212/0212043.pdf [accessed July 2024].

Sipior J.C. 2018. Peer reviewer non-performance: the need to replenish the “Commons.” Communications of the Association for Information Systems, 42(1): 27.

Sismondo S. 2017. Post-truth? SAGE Publications, London, England.

Skórzewska-Amberg M. 2022. Publications in scientific journals—the new face of deception. Krytyka Prawa, 14(4): 20–28.

Smith R. 2006. Peer review: a flawed process at the heart of science and journals. Journal of the Royal Society of Medicine, 99(4): 178–182.

Smith R. 2010. Classical peer review: an empty gun. Breast Cancer Research, 12(4): 1–4.

Spier R. 2002. The history of the peer-review process. Trends in Biotechnology, 20(8): 357–358.

Squazzoni F., Ahrweiler P., Barros T., Bianchi F., Birukou A., Blom H.J.J., et al. 2020. Unlock ways to share data on peer review. Nature, 578: 512–514.

Squazzoni F., Bravo G., Takács K. 2013. Does incentive provision increase the quality of peer review? An experimental study. Research Policy, 42(1): 287–294.

Squazzoni F., Bravo G., Grimaldo F., García-Costa D., Farjam M., Mehmani B. 2021. Gender gap in journal submissions and peer review during the first wave of the COVID-19 pandemic. A study on 2329 Elsevier journals. PLoS ONE, 16(10): e0257919.

Stafford T. 2018. Reviews, reviewers, and reviewing: the “tragedy of the commons” in the scientific publication process. Communications of the Association for Information Systems, 42(1): 25.

Stephan P. 2012. How economics shapes science. Harvard University Press, Cambridge, MA.

Stephen D. 2024. Distinguishing articles in questionable and non-questionable journals using quantitative indicators associated with quality. Preprint.

Strauss D., Gran-Ruaz S., Osman M., Williams M.T., Faber S.C. 2023. Racism and censorship in the editorial and peer review process. Frontiers in Psychology, 14: 1120938.

Strayhorn J. Jr, McDermott J. Jr, Tanguay P. 1993. An intervention to improve the reliability of manuscript reviews for the Journal of the American Academy of Child and Adolescent Psychiatry. The American Journal of Psychiatry, 150(6): 947–952.

Strielkowski W. 2018. Predatory publishing: what are the alternatives to Beall's list? The American Journal of Medicine, 131(4): 333–334.

Subramanyam R. 2013. Art of reading a journal article: methodically and effectively. Journal of Oral and Maxillofacial Pathology, 17(1): 65.

Sumpter J.P., Runnalls T.J., Johnson A.C., Barcelo D. 2023. A ‘Limitations’ section should be mandatory in all scientific papers. Science of the Total Environment, 857(2): 159395.

Sun M., Barry Danfa J., Teplitskiy M. 2022. Does double-blind peer review reduce bias? Evidence from a top computer science conference. Journal of the Association for Information Science and Technology, 73(6): 811–819.

Sun Z., Pang K.L., Li Y. 2024. The fading of status bias during the open peer review process. Journal of Informetrics, 18(3): 101528.

Teixeira da Silva J.A., Dobránszki J. 2017. Notices and policies for retractions, expressions of concern, errata and corrigenda: their importance, content, and context. Science and Engineering Ethics, 23(2): 521–554.

Teixeira da Silva J.A., Yamada Y. 2021. An extended state of uncertainty: a snap-shot of expressions of concern in neuroscience. Current Research in Behavioral Sciences, 2: 100045.

Tennant J.P. 2018. The state of the art in peer review. FEMS Microbiology Letters, 365(19): fny204.

Tennant J.P., Ross-Hellauer T. 2020. The limitations to our understanding of peer review. Research Integrity and Peer Review, 5(1): 1–14.

Tennant J.P., Dugan J.M., Graziotin D., Jacques D.C., Waldner F., Mietchen D., et al. 2017. A multi-disciplinary perspective on emergent and future innovations in peer review [version 3; peer review: 2 approved]. F1000Research, 6: 1151.

Todd P.A., Guest J.R., Lu J., Chou L.M. 2010. One in four citations in marine biology papers is inappropriate. Marine Ecology Progress Series, 408: 299–303.

Tollefson J. 2020. How Trump damaged science—and why it could take decades to recover. Nature, 586(7828): 190–194.

Tomkins A., Zhang M., Heavlin W.D. 2017. Reviewer bias in single- versus double-blind peer review. Proceedings of the National Academy of Sciences, 114(48): 12708–12713.

Torny D. 2018. PubPeer: vigilante science, journal club or alarm raiser? The controversies over anonymity in post-publication peer review. In PEERE International Conference on Peer Review. PEERE COST Network, March 2018, Rome, Italy.

Townsend F. 2013. Post-publication peer review: PubPeer. Editors' Bulletin, 9(3): 45–46.

Tsang E.W., Frey B.S. 2007. The as-is journal review process: let authors own their ideas. Academy of Management Learning & Education, 6(1): 128–136.

United2Act. 2024. Against paper mills [online]. Available from https://united2act.org/ [accessed July 2024].

Van Noorden R. 2014a. Publishers withdraw more than 120 gibberish papers. Nature.

Van Noorden R. 2014b. The scientists who get credit for peer review. Nature.

Van Rooyen S., Godlee F., Evans S., Black N., Smith R. 1999. Effect of open peer review on quality of reviews and on reviewers' recommendations: a randomised trial. BMJ, 318(7175): 23–27.

Van Schalkwyk M.C., Hird T.R., Maani N., Petticrew M., Gilmore A.B. 2020. The perils of preprints. BMJ, 370: m311.

Vaught M., Jordan D.C., Bastian H. 2017. Concern noted: a descriptive study of editorial expressions of concern in PubMed and PubMed Central. Research Integrity and Peer Review, 2(1): 1–11.

Vernon J.L. 2017. Science in the post-truth era. American Scientist, 105(1): 2–3.

Viglione G. 2020. ‘Avalanche’ of spider-paper retractions shakes behavioural-ecology community. Nature, 578(7794): 199–200.

Wakeling S., Willett P., Creaser C., Fry J., Pinfield S., Spezi V. 2017. Transitioning from a conventional to a “mega” journal: a bibliometric case study of the journal Medicine. Publications, 5(2): 7.

Wakeling S., Willett P., Creaser C., Fry J., Pinfield S., Spezi V., et al. 2020. ‘No comment’? A study of commenting on PLOS articles. Journal of Information Science, 46(1): 82–100.

Walsh E., Rooney M., Appleby L., Wilkinson G. 2000. Open peer review: a randomised controlled trial. British Journal of Psychiatry, 176(1): 47–51.

Wang W., Kong X., Zhang J., Chen Z., Xia F., Wang X. 2016. Editorial behaviors in peer review. SpringerPlus, 5(1): 903.

Ward S.M. 2016. The rise of predatory publishing: How to avoid being scammed. Weed Science, 64(4): 772–778.

Ware M. 2005. Online submission and peer-review systems. Learned Publishing, 18(4): 245–250.

Ware M., Mabe M. 2015. The STM report: an overview of scientific and scholarly journal publishing. International Association of Scientific, Technical and Medical Publishers, The Hague, Netherlands. 180p. Available from http://digitalcommons.unl.edu/scholcom/9 [accessed July 2024].

Warren M. 2018. Trust and democracy. In The Oxford Handbook of Social and Political Trust. Edited by E.M. Uslaner. Oxford University Press, New York. pp. 75–94.

Weaver W. 1961. The imperfections of science. American Scientist, 49(1): 99–113.

Webb T.J., O'Hara B., Freckleton R.P. 2008. Does double-blind review benefit female authors? Trends in Ecology & Evolution, 23(7): 351–353.

Welch M.R., Rivera R.E., Conway B.P., Yonkoski J., Lupton P.M., Giancola R. 2005. Determinants and consequences of social trust. Sociological Inquiry, 75(4): 453–473.

Weller A.C. 2001. Editorial peer review: its strengths and weaknesses. ASIST Monograph Series. Information Today, Inc., Medford, NJ.

Wight C. 2018. Post-truth, postmodernism and alternative facts. New Perspectives, 26(3): 17–29.

Wilhite A.W., Fong E.A. 2012. Coercive citation in academic publishing. Science, 335(6068): 542–543.

Wilkie T. 1996. Sources in science: who can we trust? The Lancet, 347(9011): 1308–1311.

Willis J.V., Cobey K.D., Ramos J., Chow R., Ng J.Y., Alayche M., Moher D. 2022. Online training in manuscript peer review: a systematic review. Preprint.

Willis M. 2016. Why do peer reviewers decline to review manuscripts? A study of reviewer invitation responses. Learned Publishing, 29(1): 5–7.

Young N., Dugas E. 2012. Comparing climate change coverage in Canadian English and French-language print media: environmental values, media cultures, and the narration of global warming. Canadian Journal of Sociology, 37(1): 25–54.

Zheng X., Chen J., Tollas A., Ni C. 2023. The effectiveness of peer review in identifying issues leading to retractions. Journal of Informetrics, 17(3): 101423.

Published In

FACETS

Volume 9 • Number 1 • January 2024

Pages: 1–14

Editor: Paul Dufour

History

Received: 28 May 2024

Accepted: 26 August 2024

Version of record online: 18 September 2024

Copyright

© 2024 Copyright remains with the author(s) or their institution(s). This work is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Data Availability Statement

This is a perspective article, so there are no data available to share.

Author Contributions

Conceptualization: SJC, NY, JC, RR, RL, HIB

Funding acquisition: SJC

Project administration: SJC, KP, AK

Visualization: SJC