Earth is undergoing a steady decline of biodiversity, habitat, and natural resources as humanity transforms natural capital into consumer products, urban and agricultural land, and industrial and domestic infrastructure (Postel et al. 1996; Watson et al. 2018; Richardson et al. 2023). Most developed nations have implemented some form of environmental regulation to minimize ecosystem degradation and associated loss of natural capital (defined broadly here as the abundance of listed and nonlisted species, habitats, and their associated ecological functions; Guerry et al. 2015). These protections often take the form of environmental impact assessments (EIA) for screening the effects of large development projects, and general environmental regulations (e.g., water quality and riparian protection standards) intended to protect natural capital from the cumulative impacts of routine smaller-scale development. In addition to domestic environmental legislation, many nations have also adopted post-2020 Global Biodiversity Framework targets (e.g., 30% protected area and 30% restoration of degraded ecosystems by 2030) to address ongoing declines in biodiversity, natural habitat, and ecosystem services (Díaz et al. 2020; Xu et al. 2021; Convention on Biological Diversity 2022). In principle, this establishes both a floor on the trajectory of decline of natural capital (30% minimum) and a threshold target for recovery (up to 60% in total, depending on the spatial overlap between protection and restoration).

The decline of natural capital is often exacerbated by ratchet effects (Birkeland 2004; Perkin et al. 2015), which are here broadly defined as policies or processes that inadvertently facilitate depletion of natural capital (and often obstruct its recovery). Ratchet effects exert constant downward pressure and in severe cases may induce feedback loops that amplify pressure over time. A classic example of an economic ratchet is over-investment in fishing fleets during cyclic peaks in stock abundance, followed by overfishing in subsequent periods of low stock productivity (Caddy and Gulland 1983; Ludwig et al. 1993); economic hardship then results in further pressure to exploit dwindling stocks. Regulatory ratchets arise when environmental protection is ineffective or has a significant failure rate (e.g., Quigley and Harper 2006), effectively normalizing environmental degradation despite the appearance of protection, thereby contributing to a trajectory of decline (Quigley and Harper 2006). Although many hidden processes in economic and governance systems contribute to downward pressures on natural capital, science is generally assumed to play a neutral role. However, traditional applications of science to environmental regulation and impact assessment share some of the attributes of a ratchet, and may be inadvertently contributing to the rapid loss of natural capital. Although this issue has been described elsewhere (e.g., Peterman 1990; Mapstone 1995; Kriebel et al. 2001), it remains poorly reported, and implications for managing cumulative effects and global land degradation are under-appreciated in the science community. The objective of this paper is to consider how application of a traditional hypothesis testing framework to EIA and regulation may contribute to a ratchet effect generating downward pressure on natural capital. Although there are many potential statistical and social sources of bias in EIA (e.g., intrusion of subjectivity and conflict of interest: Murray et al. 2018; Singh et al. 2019; impact monitoring duration that is too short to detect lagged effects: Singh et al. 2019; White 2019; political interference: Bragagnolo et al. 2017; Enríquez-de-Salamanca 2018; Bond et al. 2020), the focus here is on (i) ratchet effects associated with transposing conventional treatment of Type 1 and Type 2 errors to environmental assessment and regulation; (ii) ratchet effects associated with routine environmental regulation outside of EIA; and (iii) the governance structures required to correct these biases, as described below.

Cryptic biases associated with the statistical treatment of uncertainty

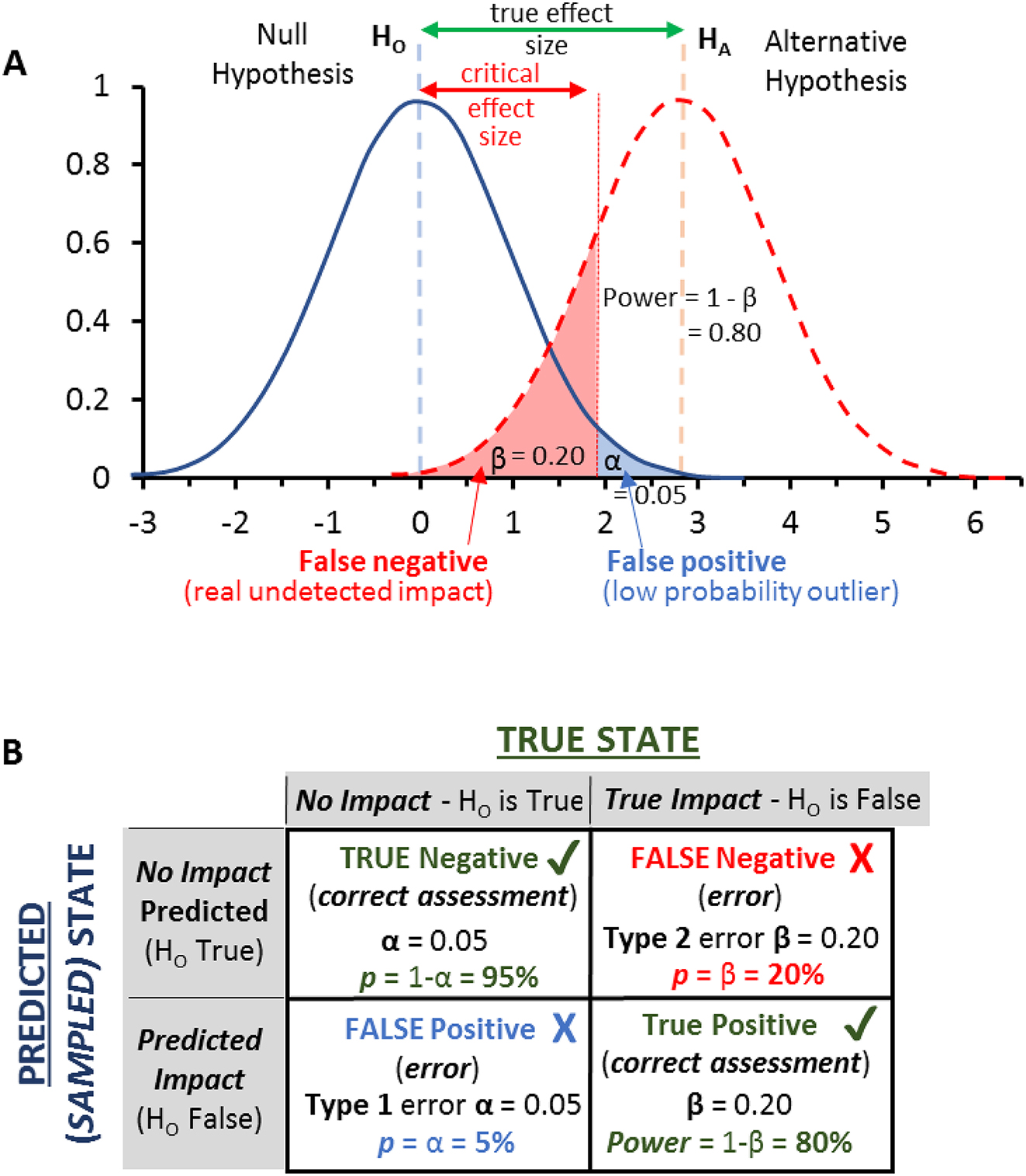

Conventional research usually involves testing the null hypothesis of no difference between treatment and control versus the alternative hypothesis of a significant treatment effect. This entails two sources of error: Type 1 error (α), which represents the probability of a false positive (i.e., falsely concluding that the treatment differs from the control when the observation is simply an outlier; Fig. 1A); and Type 2 error (β), which is the probability of a false negative, i.e., falsely concluding that treatment and control are identical (no impact) when in fact there is a difference, but the analysis lacks sensitivity to detect the effect (Fig. 1; Biau et al. 2010). The quantity 1 − β represents statistical power, i.e., the power of an experimental or monitoring design to detect a treatment effect (Fig. 1A; Peterman 1990).

In traditional research, the primary goal is to detect or demonstrate a treatment effect, and a significant effect is generally the trigger for scientific progress; failure to detect a treatment effect may stimulate more research with increased replication and better error control, but absence of a treatment effect usually indicates a dead end for any particular research hypothesis (Mapstone 1995). By convention, the Type 1 error rate is generally set at α = 0.05 (i.e., a 5% probability of falsely concluding that there is a treatment effect). There is also a trade-off between Type 1 and Type 2 errors (Fig. 1A); a decrease in α (e.g., from 0.05 to 0.01) right-shifts the significance threshold and decreases the likelihood of a Type 1 error, but increases the likelihood of a Type 2 error.
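The trade-off can be illustrated numerically. The following is a minimal sketch, assuming a one-sided, one-sample z-test with known unit variance; the function name `type2_error`, the standardized effect size (0.5), and the sample size (25) are hypothetical choices made for illustration and are not taken from any particular assessment.

```python
from statistics import NormalDist


def type2_error(alpha: float, effect_size: float, n: int) -> float:
    """Return beta for a one-sided, one-sample z-test (assumed design).

    effect_size is the true difference in standard deviation units;
    n is the number of replicates. Both are illustrative assumptions.
    """
    std_normal = NormalDist()
    # Significance threshold on the z scale; it moves right as alpha shrinks.
    z_crit = std_normal.inv_cdf(1.0 - alpha)
    # Expected value of the test statistic when the effect is real.
    shift = effect_size * n ** 0.5
    # Probability that the statistic falls below the threshold (a miss).
    return std_normal.cdf(z_crit - shift)


for alpha in (0.05, 0.01):
    beta = type2_error(alpha, effect_size=0.5, n=25)
    print(f"alpha={alpha:.2f}  beta={beta:.3f}  power={1 - beta:.3f}")
```

Under these assumed values, α = 0.05 corresponds to power of roughly 80%, and tightening α to 0.01 with the same design roughly doubles β.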

While scientists try to design experiments with adequate power to detect treatment effects, the likelihood of a Type 2 error is usually not fixed, but varies depending on statistical design; however, by convention the default target power is often set at an 80% probability of detecting a treatment effect (β = 0.2), implying that the true effect will go undetected 20% of the time (Fig. 1B; Cohen 1988; Biau et al. 2010). This asymmetry (5% error rate for false positives, 20% for false negatives) reflects the premium placed on avoiding a mistaken conclusion that a treatment effect exists when it does not (i.e., a false positive), since this could cause significant investment in a nonsensical research trajectory and potentially lead to harm if false results are applied, for instance in a medical setting (Shrader-Frechette and McCoy 1992). On the other hand, failing to detect a true treatment effect (false negative or Type 2 error), while a potential opportunity cost to basic science, is less likely to lead to active harm. In effect, the implicit trade-off between Type 1 (α = 0.05) and Type 2 error (β = 0.2) in traditional science places a premium on minimizing false positives (falsely concluding that treatment effects exist) over false negatives (falsely concluding that treatment effects are absent), and is precautionary towards confidence in inferring true treatment effects over their absence (Peterman 1990; Shrader-Frechette and McCoy 1992; Mapstone 1995; Kriebel et al. 2001).

In contrast, the causality of action based on hypothesis testing is reversed in the field of EIA; the absence of a treatment effect (no predicted environmental impact) is what triggers action, in the form of economic development. A false positive (Type 1 error indicating that development will cause impact when this is false) typically has a fixed probability α = 0.05, effectively making the assessment process precautionary towards development interests (low likelihood of lost development opportunities). In contrast, the power to detect an impact (i.e., to avoid a false negative) is generally lower, and varies across assessments depending on replication, the threshold effect size for detection of impact, and other aspects of monitoring and experimental design (Andrew and Mapstone 1988; Peterman 1990; Mapstone 1995), but the default target power is often 80% (Cohen 1988). In this sense, the reverse causality of action between traditional research hypotheses (insignificant treatment effect results in no action) and development under EIA (insignificant treatment effect triggers development) makes low power differentially protective of development interests (Mapstone 1995). In other words, a fixed α = 0.05 and a variable but generally higher β mean that the likelihood of mistakenly authorizing a significant environmental impact is usually higher than the likelihood of mistakenly preventing development.

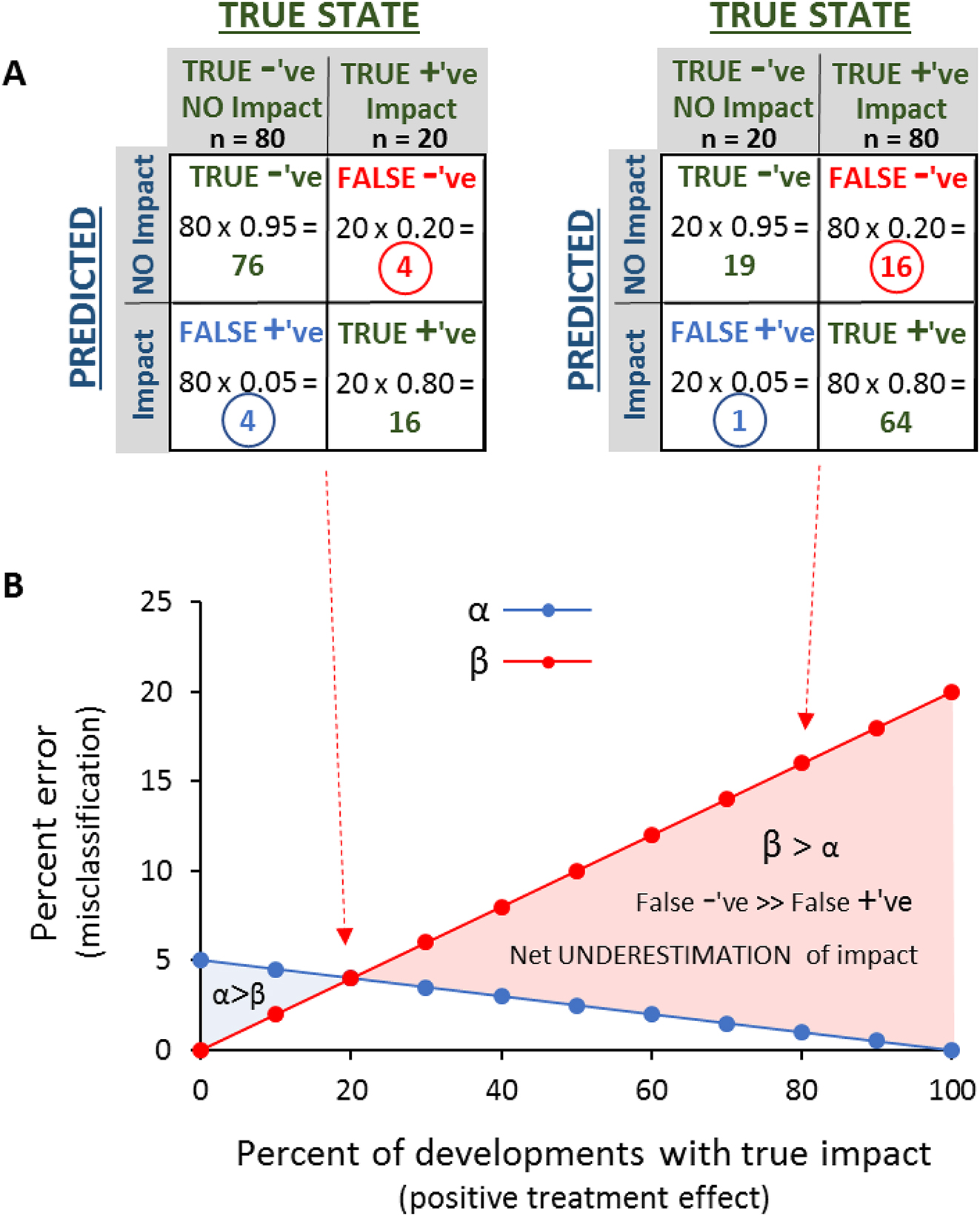

This asymmetry in predicted risk is very sensitive to the true frequency of development impact (Biau et al. 2010), as illustrated in Fig. 2. For example, if the true frequency of impact is 80% for a hypothetical population of 100 proposed developments, then 80 out of 100 will experience impacts exceeding a specified critical effect size, and 20 will remain unaffected (Fig. 2A, right table). If α = 0.05, then of the 20 unimpacted sites, 20 × 0.05 = 1 will be misclassified as impacted (false positive, hypothetically causing lost development opportunities). If β = 0.2, then 80 × 0.2 = 16 of the impacted sites will be misclassified as unimpacted when in fact they would be impacted by development (false negatives, hypothetically leading to environmental harm). In this case of an 80% true frequency of impact, false negatives (16%) greatly exceed false positives (1%), meaning that the assessment process is far more precautionary towards development interests than towards the environment (15% net underestimation of impacted sites; Fig. 2B; red shaded area in Fig. 1A). In contrast, precaution towards environmental impacts only emerges at low true frequencies of impact (Fig. 2). If the true frequency of realized impact from a hypothetical development is 20% (see calculations in Fig. 2A, left table), then the frequency of false negatives (20 × 0.2 = 4) is equal to the frequency of false positives (80 × 0.05 = 4; Fig. 1D), and the process is equally precautionary to both economic and environmental interests (4% misclassification rate for both; Fig. 2). At higher frequencies of true impact there is a clear bias in favour of underestimating development effects, driven by the low power to detect them (default 80% power, β = 0.2).
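The arithmetic above can be reproduced for any true frequency of impact with a short calculation. The sketch below is illustrative only; the function names `misclassification` and `break_even_rate` are hypothetical, and the defaults simply restate the conventional α = 0.05 and β = 0.2 used in the text.

```python
def misclassification(true_impact_rate, alpha=0.05, beta=0.20, n_projects=100):
    """Expected false positives and false negatives in a population of projects."""
    impacted = n_projects * true_impact_rate
    unimpacted = n_projects - impacted
    false_pos = unimpacted * alpha  # benign projects flagged as harmful
    false_neg = impacted * beta     # harmful projects classified as benign
    return false_pos, false_neg, false_neg - false_pos


def break_even_rate(alpha=0.05, beta=0.20):
    """True impact frequency p at which (1 - p) * alpha equals p * beta."""
    return alpha / (alpha + beta)


for rate in (0.2, 0.8):
    fp, fn, net = misclassification(rate)
    print(f"true impact {rate:.0%}: false positives={fp:.0f}, "
          f"false negatives={fn:.0f}, net underestimate={net:.0f}")

print(f"break-even true impact frequency: {break_even_rate():.0%}")
```

Setting (1 − p)α = pβ gives p = α/(α + β), so with the conventional thresholds the two error types balance only when 20% of projects are truly harmful; any higher frequency favours development interests.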

Mapstone (1995) discusses the balance between Type 1 and Type 2 error rates, how it affects development versus environmental protection interests, and describes a process for covarying α and β thresholds to achieve equal weighting of uncertainty (α = β) and therefore economic and environmental consequences. While this more nuanced approach has merit and experienced EIA practitioners may use it, traditional assessment with α fixed at 0.05 remains the general default.
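As a simplified illustration of what equal weighting implies (not a reproduction of the procedure in Mapstone 1995), the sketch below assumes a one-sided z-test with known unit variance. Under that assumption, placing the decision threshold midway between the null and alternative expectations yields α = β. The function name `balanced_error_rate` and the example effect size and sample size are hypothetical.

```python
from statistics import NormalDist


def balanced_error_rate(effect_size: float, n: int):
    """Threshold and error rates when alpha is set equal to beta.

    Assumes a one-sided z-test with unit variance; effect_size is the
    critical effect size in standard deviation units (illustrative).
    """
    std_normal = NormalDist()
    shift = effect_size * n ** 0.5   # expected z under a true impact
    critical_z = shift / 2.0         # midpoint gives alpha == beta by symmetry
    alpha = 1.0 - std_normal.cdf(critical_z)
    beta = std_normal.cdf(critical_z - shift)
    return critical_z, alpha, beta


critical_z, alpha, beta = balanced_error_rate(effect_size=0.5, n=25)
print(f"critical z={critical_z:.2f}  alpha={alpha:.3f}  beta={beta:.3f}")
```

For the same hypothetical design that gives roughly 80% power at α = 0.05, balancing the two errors puts both at about 0.11; the shared error rate falls only as replication increases or the critical effect size is relaxed.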

This example presents a simplified picture of the overall environmental assessment process, which often involves additional iterative steps to minimize risk beyond what is considered here; nevertheless, it captures a very real bias in the underlying statistical logic. In principle, the potential for bias will apply to assessment of impacts during post-assessment monitoring, as well as the initial screening and assessment of whether a development should proceed (assuming the decision involves inferential statistics).

EIA is a complex process generally applied to very large developments, with impacts that are often unique to each project. In contrast, the many routine impacts of most small developments are regulated with general environmental standards (e.g., fixed water quality thresholds or riparian buffer widths). Fixed regulatory standards have the advantage of eliminating the need to assess uncertainty around impacts on a site-by-site basis; however, the degree to which standards meet or fall short of a reference (unimpacted) condition is effectively built into the regulation itself, and many regulatory approaches ensure only partial protection of natural capital or ecosystem function (Lemieux et al. 2019; Zu Ermgassen et al. 2019). For example, streamside riparian buffers play a critical role in protecting production of stream salmonids by ensuring shade, recruitment of large wood essential to pool formation, and maintenance of channel complexity (Richardson et al. 2010; Rosenfeld et al. 2024). Riparian buffer widths may be wide enough to ensure close to 100% of reference wood input levels on anadromous fish-bearing streams on public land in some jurisdictions (e.g., Alaska; Murphy 1995), but elsewhere narrower buffers on small forested streams may retain only 80% of reference levels of large wood inputs (e.g., Johnston et al. 2007), indicating a fractional loss of natural capital. In other words, the regulation itself may have an embedded and often cryptic trade-off between economic and environmental values. Complete absence of riparian protection on agricultural streams (e.g., British Columbia Ministry of Forests, Lands, and NRO 2019) represents a more extreme but less cryptic failure to protect natural capital by explicit exemption. In addition, beyond these design limitations, most regulations also have significant failure rates associated with incomplete compliance, enforcement, or mitigation (Zu Ermgassen et al. 2019; Third et al. 2021). For example, a decade after riparian regulations were brought into force in 2005 in the Province of British Columbia, Canada, 17% of local governments were found to be noncompliant (Office of the Ombudsperson of British Columbia 2022). Consequently, there will almost always be residual impacts of development even with well-intended regulations (i.e., the product of embedded limitations and lack of compliance), again contributing to a potential ratchet effect.

Random errors in parameter estimates are typically equally distributed around the mean if an estimation procedure is without bias, leading to a reasonable expectation that on average management decisions should also be without bias (overestimations equal underestimations). However, in the field of environmental regulation, positive and negative errors do not necessarily balance. Type 2 errors (false negatives, where potentially harmful developments are greenlighted) lead to loss of natural capital. In contrast, Type 1 errors (false positives, where environmentally benign developments do not proceed) do not result in an equivalent increase in natural capital; at best they result in no net loss (NNL). Therefore, the combined cumulative impacts of neutral Type 1 errors and negative-effect Type 2 errors will always be a directional net loss of natural capital, again supporting a ratchet effect. This circumstance applies equally to EIA (large developments) and application of routine regulatory standards. The potential for ratchet effects will often be compounded by relatively large detection thresholds associated with low statistical power (Fig. 1). Biological data are notoriously variable, and effect size detection thresholds on the order of 50% of the mean or greater are not uncommon (e.g., Steidl et al. 1997), and 25% is often used as a detection threshold in environmental monitoring (Munkittrick et al. 2009); the implication is that substantial impacts may go undetected, masking potentially significant cumulative effects when aggregated across landscapes.
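To give a rough sense of how variability and replication set the detection threshold, the sketch below uses the standard normal-approximation formula for a two-group comparison with equal replication per group. It is an approximation under stated assumptions rather than a method drawn from the studies cited above; the function name `min_detectable_effect`, the coefficient of variation (0.5), and the replicate numbers are hypothetical.

```python
from statistics import NormalDist


def min_detectable_effect(cv: float, n_per_group: int,
                          alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest detectable difference as a fraction of the reference mean.

    Normal approximation for a two-sided comparison of two group means
    with a common coefficient of variation (cv). Illustrative only.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1.0 - alpha / 2.0)
    z_power = std_normal.inv_cdf(power)
    return (z_alpha + z_power) * cv * (2.0 / n_per_group) ** 0.5


for n in (10, 32, 63):
    mde = min_detectable_effect(cv=0.5, n_per_group=n)
    print(f"n per group={n:3d}  detectable effect ~ {mde:.0%} of the mean")
```

With an assumed coefficient of variation of 0.5, about ten replicates per group can only resolve differences exceeding half of the mean, and on the order of sixty replicates per group are needed to reach a 25% threshold.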

Offsetting (e.g., restoration to compensate for habitat loss) is widely promoted as a pathway to remedy the negative environmental impacts of development, with variable success (Quigley and Harper 2006; Rey Benayas et al. 2009; Quétier et al. 2014; Guillet and Semal 2018). If conventional statistics are used to calculate the magnitude of impact that needs to be offset, then the arguments above indicate that most offsetting requirements (i.e., multipliers) will be underestimated. The concept of offsetting is also typically applied to mitigate the impacts of large developments associated with detailed EIAs. However, the logic above makes a strong case that some form of offsetting also needs to accompany routine environmental regulations (e.g., riparian protection legislation), where incomplete compliance generates cumulative impacts that require mitigation to avoid net loss, despite apparently protective regulation.

While concerns are well established that the statistical convention of setting α ≪ β biases detection of treatment effects, some recent studies have highlighted the opposite effect. Low-probability events that document significant impacts on biodiversity may represent Type 1 errors (false positives), with their significance amplified through selective publication (Lemoine et al. 2016; Yang et al. 2022). However, this selection bias is unlikely to be a concern in routine EIAs, which are rarely published in the primary literature and therefore not subject to a filter that amplifies significant results.

Statistical interpretation of the precautionary principle

The precautionary principle (often stated as “a lack of full scientific understanding shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation”; Rio Declaration, UNECP 1992; Cooney 2004) is frequently promoted as a conservative approach to deal with uncertainty in natural resource management (e.g., Raffensperger and Barrett 2001; Sachs 2011). However, it is often strongly opposed by stakeholders who see it as a mechanism to obstruct development (Sunstein 2003; Turner and Hartzell 2004), not least because its meaning and interpretation are poorly defined (O'Riordan and Jordan 1995; Foster et al. 2000). Consequently, it sees limited practical application, despite being recognized as a guiding principle in policy and legislation (Foster et al. 2000).

The preceding discussion on Type 2 errors provides the foundation for an objective interpretation of the precautionary principle, where the goal is not to wield uncertainty as a blanket rationale for obstructing development, but to explicitly correct the inherent biases described above. Practical implementation of this precautionary approach could be challenging, but would be based on explicit recognition of the anticipated rate of false negatives (or other quantifiable biases; e.g., Thompson et al. 2000) and the need to eliminate them. For example, if asymmetry in Type 1 and Type 2 errors means that 15 out of 100 developments are false negatives (e.g., Fig. 2), then in principle 15% of projects could cause environmental harm and should not proceed or would require additional offsetting. In this example, a precautionary approach at the population or landscape level of multiple development projects could support a goal of identifying the 15% of highest risk projects pre-approval based on information that may have been excluded from initial quantitative screening or pre-impact monitoring, or using alternative weight-of-evidence approaches (Plowright et al. 2008; Downing et al. 2010). At the project assessment level, it could mean better balancing Type 1 and Type 2 error (i.e., equal allocation of risk to the environment vs. development interests), either through formal statistics (adjusting α = β; Mapstone 1995) or by qualitative assessment of acceptable threshold effect sizes. However, even when α and β are equal, any error will still lead to net loss and require some form of additional offset, albeit at a reduced rate. Empirical estimation of EIA error rates (i.e., impact misclassification) would be useful to establish the true frequency and magnitude of error, and could in principle be derived from retrospective comparison of predicted versus realized impacts extracted from pre- and post-impact reporting for a population of project assessments.
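A retrospective comparison of this kind reduces to tallying a two-by-two classification table. The sketch below shows one way the tally could be computed, assuming each project can be coded as a pair (impact predicted, impact realized); the function name `error_rates` and the 35 example records are entirely hypothetical and do not represent real assessments.

```python
from collections import Counter


def error_rates(records):
    """Empirical misclassification rates from (predicted, realized) pairs.

    Each record is a tuple of two booleans: whether a significant impact
    was predicted pre-approval, and whether one was observed afterwards.
    """
    counts = Counter(records)
    true_pos = counts[(True, True)]
    false_pos = counts[(True, False)]   # impact predicted, none realized
    false_neg = counts[(False, True)]   # no impact predicted, impact realized
    true_neg = counts[(False, False)]
    impacted = true_pos + false_neg
    unimpacted = false_pos + true_neg
    return {
        "false_negative_rate": false_neg / impacted if impacted else float("nan"),
        "false_positive_rate": false_pos / unimpacted if unimpacted else float("nan"),
    }


# Hypothetical records for 35 projects, for illustration only.
records = ([(False, True)] * 3 + [(True, True)] * 12
           + [(False, False)] * 19 + [(True, False)] * 1)
print(error_rates(records))
```

In practice, realized impacts can only be observed for projects that proceed, so an empirical false positive rate would need to be interpreted with that constraint in mind.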

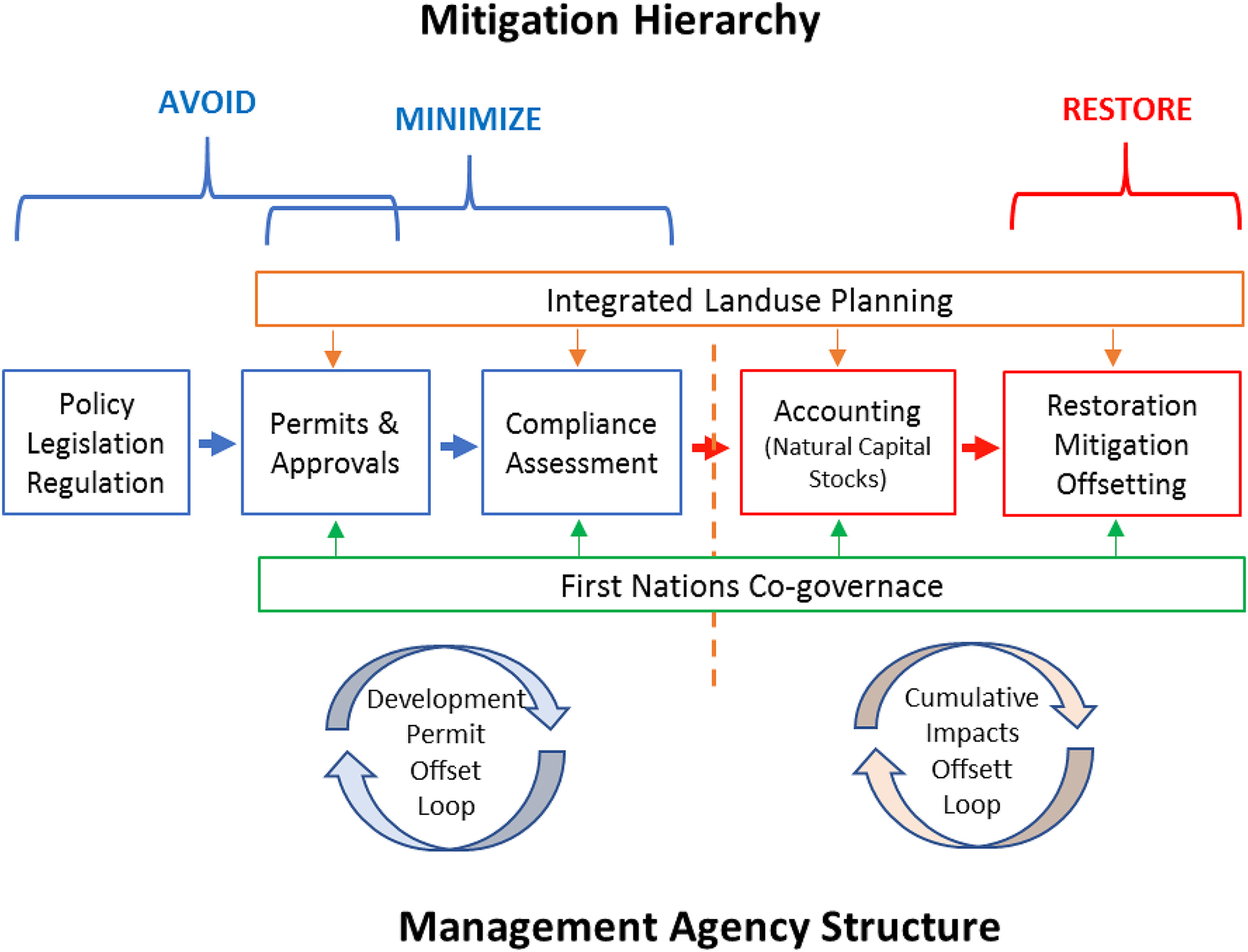

Ultimately, correcting the biases identified above requires some degree of process and institutional change. A shortlist of priority actions to minimize the impacts of regulatory and institutional ratchets in environmental governance follows.