Introduction

In Canada, CEAs need to consider “scientific information, Indigenous Knowledge, and community knowledge” as described in the Impact Assessment Act (s. 6(1)(j); Impact Assessment Act of Canada 2019). CBM projects may be able to draw from all of these knowledge sources, but CBM describes a broad diversity of projects with a wide range of objectives and structures (Theobald et al. 2015; Kanu et al. 2016). Some CBM projects are designed and executed by researchers from outside local communities, with objectives that are not relevant to the community (Danielsen et al. 2008; Kipp et al. 2019), while others are initiated and conducted solely by local communities for their own purposes (Danielsen et al. 2008). Meaningful participation in monitoring requires that local communities co-design the CBM project, lead its data collection, and have internal uses for the data (Mahoney et al. 2009; Wilson et al. 2018; Reed et al. 2020). These projects are more likely to empower community members, contribute to decision-making, and be more sustainable over time because they garner greater interest and participation from community members (Kouril et al. 2016; McKay and Johnson 2017a; Parlee et al. 2017). Here, we focus on CBM projects in which the data are primarily collected by community members who may have received training or guidance from professional researchers, and the data are used either by the local community and researchers or by the local community alone.

We aimed to understand if CBM has the potential to enhance the biophysical baseline studies that inform CEA by elevating the participation of local communities and by collecting datasets that meet western science standards. Our focus on western science methods in CBM is not intended to diminish the critical importance of Indigenous science and knowledge; instead, it reflects our positionality as non-Indigenous scientists. While we believe CBM projects can contribute to “scientific information, Indigenous Knowledge, and community knowledge”, we focus here on the potential western science contributions of CBM to the biophysical components of CEA, as this is our area of expertise and experience. As such, we made no attempts to evaluate the ability of projects to include Indigenous Knowledge, and Indigenous Knowledge was not an attribute in our scoring rubric. We also did not attempt to extrapolate whether Indigenous Knowledge was included in a project and only recorded Indigenous Knowledge as a project characteristic when it was explicitly stated in the project documentation.

Our objectives were to evaluate the ability of CBM projects in Canada to collect biophysical data that could support western science approaches to CEA and to identify what characteristics (if any) these projects shared. We developed a rubric to score the ability of CBM projects to contribute data to biophysical baseline studies for CEAs and determined whether the highest-scoring projects had common characteristics. Identifying shared characteristics could support the intentional design of future CBM projects that intend to contribute western scientific data to CEAs while also empowering communities to lead local decision-making processes.

Materials and methods

We conducted the data collection for this study from February to June 2020.

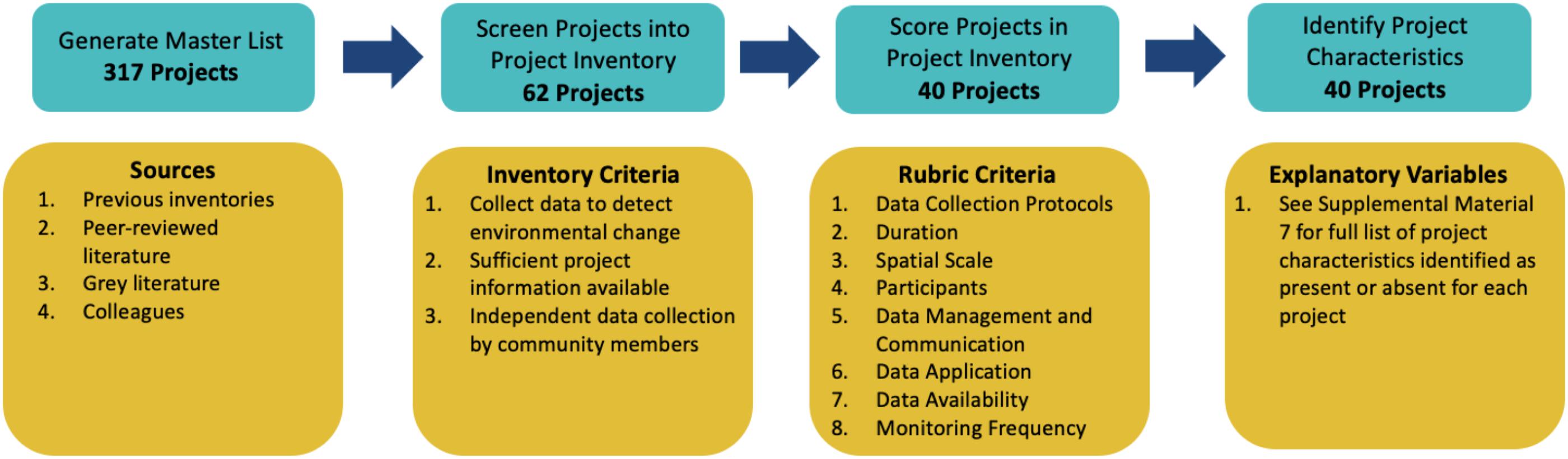

Community-based monitoring (CBM) project inventory

We screened our master list of CBM projects to identify those that met the following criteria: (1) the project collected data to detect environmental change (i.e., monitored environmental indicators, such as water quality, wildlife abundance, and air quality); (2) sufficient project information was readily available online to allow further assessment (e.g., description of data collection techniques, monitoring location, and project participants); and (3) available information suggested that community members collected data independently of researchers. We based this screening on online, project-specific information obtained through Google searches. A review of CBM projects by Kipp et al. (2019) found that many projects are described as community-based, but the extent of community member involvement varied and was often limited. We therefore narrowed our focus to projects that enabled community members to collect monitoring data without the direct supervision of researchers or professional scientists. For this study, we defined CBM projects as projects in which the data are primarily collected by community members who may have received training or guidance from professional researchers, and the data are used either by the local community and researchers or by the local community alone. We created this definition by merging three categories (3, 4, and 5) of CBM projects defined by Danielsen et al. (2008). For this study, community members are either local community members, who may or may not be paid to collect data, or members of the general public who volunteer their time. Sixty-two CBM projects in the project inventory met our study criteria (Fig. 1; Supplementary Material 4; Table S4).

Project scoring

We developed a scoring rubric to evaluate the ability of CBM projects to collect environmental baseline data that could support western science approaches to regional CEAs. We identified eight project attributes that align with design considerations of environmental baseline studies and that address the need for regional CEAs (Table 1 and Fig. 1; Supplementary Material 5). These eight project attributes were selected and defined based on recommendations for improving CEA baseline studies from the literature and our collective experience in conducting environmental monitoring programs, including CBM projects. As such, these project attributes reflect our experiences with western science methodologies and do not represent an exhaustive list of potentially useful project attributes. We welcome others to modify the scoring rubric, which we have made available in Supplementary Material 5, based on their knowledge and experiences.

Our selected project attributes included the rigour of data collection protocols, the project duration, the project spatial scale, the number of project participants, how well data were managed, sampling frequency, data application, and data availability. We gave each attribute a score between 1 and 5 (i.e., ordinal scale), with 5 indicating that the project had an exceptional ability to contribute environmental baseline data to regional CEAs. Scores were relative, and thus, “lower” scores did not necessarily indicate that a project could not contribute valuable data to baseline studies for regional CEA; instead, these “lower” scores indicate that other projects either offered or demonstrated evidence of greater capacity or complexity for that particular attribute. For example, monitoring an area of 50 000 km² would yield valuable data for a regional baseline study, whereas monitoring an area of 500 000 km² could yield valuable data for several regional studies and allow for comparisons among regions with varying levels and types of human activities that could offer greater insights into cumulative effects. While CBM projects that monitor larger areas do not necessarily collect more robust data, the capacity to monitor larger areas suggests an ability to overcome the greater logistical complexities involved, which would be valuable to learn from when designing future CBM projects for CEA.
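A minimal sketch of how a cumulative attribute score could be formed, assuming each of the eight attributes has already been assigned an integer from 1 to 5 and that the cumulative score is a simple sum. The attribute keys are hypothetical labels, not column names from the rubric in Supplementary Material 5.

```python
# Hypothetical keys for the eight rubric attributes (illustrative labels only).
ATTRIBUTES = (
    "data_collection_protocols",
    "project_duration",
    "spatial_scale",
    "project_participants",
    "data_management",
    "monitoring_frequency",
    "data_application",
    "data_availability",
)


def cumulative_score(scores: dict[str, int]) -> int:
    """Sum the eight ordinal attribute scores (each 1-5) into one project total."""
    missing = [attr for attr in ATTRIBUTES if attr not in scores]
    if missing:
        # A project lacking detail for any criterion cannot be scored.
        raise ValueError(f"insufficient information for: {missing}")
    for attr in ATTRIBUTES:
        if not 1 <= scores[attr] <= 5:
            raise ValueError(f"{attr} score out of range: {scores[attr]}")
    return sum(scores[attr] for attr in ATTRIBUTES)


# A hypothetical project scoring 3 on every attribute totals 24 (possible range 8-40).
print(cumulative_score({attr: 3 for attr in ATTRIBUTES}))  # -> 24
```

Summing ordinal scores treats each attribute as equally weighted; a different weighting would be one of the rubric modifications others might explore.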

We defined the score categories according to numerical ranges identified during the initial project search or possible options based on project descriptions. We then assigned the cumulative attribute score for each project to one of four score categories based on quartiles: Quartile 1 (project score ≤25th percentile), Quartile 2 (>25th and ≤50th percentile), Quartile 3 (>50th and ≤75th percentile), or Quartile 4 (>75th percentile). Projects with higher scores (i.e., Quartile 4 > 3 > 2 > 1) can be interpreted as having a relatively greater ability to contribute environmental baseline data to regional CEAs, based on the information we were able to gather. Our rationale for each selected project attribute is presented below, and the scores are defined in Table 1.
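The quartile assignment can be sketched as follows. We assume linear interpolation between order statistics for the percentiles (Python's `statistics.quantiles` with `method="inclusive"`); the text does not state which percentile definition was used. A score that falls exactly on a cut point goes to the lower category, matching the ≤ boundaries above. Project names and totals are hypothetical.

```python
from statistics import quantiles


def assign_quartiles(totals: dict[str, int]) -> dict[str, int]:
    """Map each project's cumulative score to score category 1-4."""
    # Three cut points: the 25th, 50th, and 75th percentiles of all totals.
    q25, q50, q75 = quantiles(list(totals.values()), n=4, method="inclusive")

    def category(score: int) -> int:
        if score <= q25:
            return 1
        if score <= q50:
            return 2
        if score <= q75:
            return 3
        return 4

    return {project: category(score) for project, score in totals.items()}


# Hypothetical totals for eight projects (not study data).
example = {"P1": 10, "P2": 12, "P3": 14, "P4": 16, "P5": 18, "P6": 20, "P7": 22, "P8": 24}
print(assign_quartiles(example))
# -> {'P1': 1, 'P2': 1, 'P3': 2, 'P4': 2, 'P5': 3, 'P6': 3, 'P7': 4, 'P8': 4}
```

Because the categories are defined relative to the sample, adding or removing projects can shift the cut points and move a project between categories.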

Data collection protocols

This criterion scored the projects based on whether standardized data collection protocols (i.e., a consistent approach to data collection) were used and reported. Data collection methods should follow standardized protocols to collect defensible data that can inform western science approaches to environmental monitoring and decision-making (Hunsberger et al. 2005; Conrad and Daoust 2008; Kouril et al. 2016; McKay and Johnson 2017a; Herman-Mercer et al. 2018). Standardized monitoring methods are essential in CEA for determining which datasets can be compared across space and time for regional assessments (Miller et al. 2022). Successful integration of project-level and regional CEA requires standardized long-term monitoring methods (Dubé et al. 2006; Foley et al. 2017; Armitage et al. 2019).

McKay and Johnson (2017a) found that government and industry representatives believed the effectiveness of CBM projects depended on “rigorous, robust, and defensible methods”. Furthermore, standardized monitoring approaches facilitate earlier detection of environmental effects and can improve adaptive management (Roach and Walker 2017).

Project duration

This criterion defines the number of years the project has been collecting monitoring data. Cumulative effects monitoring, assessment, and management are long-term processes (Hegmann et al. 1999; ECCC 2016). Long-term monitoring data are needed to inform robust baseline studies that better define natural variability, identify deviations from that variability, and produce more accurate impact prediction models (Duinker and Greig 2006; Dubé et al. 2013; ECCC 2016; Roach and Walker 2017). Long-term monitoring before and after developments also helps identify when and where mitigative measures are needed and supports adaptive management (Duinker and Greig 2006; Dubé et al. 2013; ECCC 2016; Roach and Walker 2017). The duration of a CBM project is also an indicator of its success in holding the interest of participants and funding agencies.

The total number of years did not include the time during which the project was designed or any years when the project was on hold (i.e., we only included years when data were collected in the total duration calculation). We did not include 2020 in the duration calculation for ongoing projects, because the 2020 sampling period would not have ended for all projects before we completed our study. The duration of projects in the inventory ranged from 1 to 29 years, with a median of 8 years. We used data from an initial project inventory to generate a typical range of project duration. We divided this range into four equal-sized categories, with an additional category representing exceptionally long projects.
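The duration rules above can be sketched in two steps: count only the years with data collection (excluding 2020), then place that count into four equal-width bins over a typical range, with a fifth category above it. The typical range used in the example (1 to 21 years) is hypothetical, and the half-open bin boundaries are our assumption; Table 1 holds the actual category limits.

```python
def project_duration(sampled_years: set[int], excluded_year: int = 2020) -> int:
    """Count only years in which monitoring data were collected.

    Design years and on-hold years are simply absent from `sampled_years`;
    the incomplete 2020 season is dropped explicitly.
    """
    return len({year for year in sampled_years if year != excluded_year})


def duration_score(years: int, typical_min: float, typical_max: float) -> int:
    """Score 1-4 from four equal-width bins over the typical range; 5 above it.

    Bins are half-open [lower, upper); a value equal to typical_max is kept in bin 4.
    """
    if years > typical_max:
        return 5  # exceptionally long project
    width = (typical_max - typical_min) / 4
    bin_index = int((years - typical_min) // width) + 1
    return max(1, min(bin_index, 4))


# Sampled 2012, 2013, 2015 (on hold in 2014) and again in 2020.
years = project_duration({2012, 2013, 2015, 2020})
print(years)  # -> 3
print(duration_score(years, typical_min=1, typical_max=21))  # -> 1
```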

Spatial scale

For projects that monitored harvesting data and did not define a study area size, we estimated a 100 km radius (i.e., 31 416 km²) for each participating community. The size of study areas monitored by the projects in the inventory ranged from 5 to 1 100 000 km², with a median of 3939 km².
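The default area for each participating community follows from the area of a circle with radius r = 100 km. The text does not state how areas were combined when several communities took part, so only the per-community figure is shown:

```latex
A = \pi r^{2} = \pi \times (100~\text{km})^{2} = 10\,000\,\pi~\text{km}^{2} \approx 31\,416~\text{km}^{2}
```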

Project participants

Cumulative effects monitoring, assessment, and management require coordination of activities among multiple participants (ECCC 2016). CBM projects demonstrating coordination success, particularly the capacity to coordinate numerous communities, are valuable models. This criterion scored the projects based on the number of communities or non-profit groups that routinely participated in the project and was meant to help understand the scope of the logistics and coordination required to operate the project. This criterion was also meant to reflect how many communities or groups can be expected to be involved in one project. When the number of communities or non-profit groups was not provided, we scored the project based on its number of volunteers.

The number of communities or non-profit organizations participating in projects in the inventory ranged from 1 to 35, with a median of 2. We used data from an initial project inventory to generate a typical range of community participants. We used this range to define three equal-sized categories above 1, with an additional category representing abnormally large participant numbers.
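One reading of this scheme, sketched under stated assumptions: a single participating group scores 1, three equal-width bins between 1 and the upper end of the typical range score 2 to 4, and anything above that range scores 5. The upper limit of 10 groups in the example is hypothetical, as are the bin boundaries; Table 1 defines the actual categories.

```python
import math


def participant_score(n_groups: int, typical_max: int) -> int:
    """Score the number of participating communities or groups.

    1 for a single group, 2-4 for three equal-width bins in (1, typical_max],
    and 5 for abnormally large numbers above typical_max.
    """
    if n_groups < 1:
        raise ValueError("a project needs at least one participating group")
    if n_groups == 1:
        return 1
    if n_groups > typical_max:
        return 5  # abnormally large number of participants
    width = (typical_max - 1) / 3
    bin_index = math.ceil((n_groups - 1) / width)  # 1, 2, or 3
    return 1 + max(1, min(bin_index, 3))


# With a hypothetical typical maximum of 10 groups, the bins are (1, 4], (4, 7], (7, 10].
print([participant_score(n, typical_max=10) for n in (1, 2, 5, 8, 11)])  # -> [1, 2, 3, 4, 5]
```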

Data management and communication

This criterion scored the projects based on whether they had data management protocols that clearly articulated data handling and management procedures for analysis and whether they regularly communicated results. Data management is critical to effective cumulative effects monitoring (ECCC 2016). Data management systems can improve the integrity of monitoring projects and facilitate effective communication of data to partners, rightsholders, stakeholders, and the public. Data management systems and communication are also essential when data from cumulative effects monitoring are used to develop management actions or responses (ECCC 2016; McKay and Johnson 2017a; Kipp et al. 2019). The score categories were confined to three scores, as we found only three explicit variations in data management.

Monitoring frequency

This score was based on the frequency of data collection for the indicators routinely monitored by the project. If project-specific frequencies varied among indicators or variables, we used the most frequent sampling for the scoring. We used this scoring criterion to understand if data collection protocols included consistent data collection and if the data could capture temporal variability. In addition, this criterion helped identify projects that required greater involvement and commitment by project coordinators and participants throughout the year (i.e., does the project only operate during one month of the year, or does sampling occur throughout the year?).

Some monitoring parameters only need to be sampled annually; however, monthly monitoring is recommended for other variables to clearly understand seasonal variability. As such, programs demonstrating the capacity to collect monitoring data throughout the year are particularly valuable and applicable to CEA. One of the purported benefits of CBM is more robust datasets that include data collected throughout the year (Gérin-Lajoie et al. 2018). Regardless of timing, samples should be collected repeatedly at specific times of the year to allow robust trend analysis (Carlsson et al. 2015).

Data application

The purpose of this criterion was to rank the projects based on whether the data were used to inform decisions. Cumulative effects data are meant to be used in environmental policy, management, and decision-making (ECCC 2016). The hope for CBM is that decision-makers will respect and use the data to inform decisions (Theobald et al. 2015; McKay and Johnson 2017a). Some view CBM as an avenue for communicating with communities, while others see it as a data source to inform decisions (Kanu et al. 2016; McKay and Johnson 2017a). Hence, it is important to identify whether the data were actually used to inform decisions. For example, the Indigenous Observation Network's data directly informed the Yukon River Watershed Plan (LLC 2020), so it received a data application score of 5.

Data availability

This scoring criterion was meant to define the availability of data in a form that can be analyzed and interpreted by those not directly associated with the project (e.g., project databases or technical reports that present data tables). Effective regional CEA requires project-level and regional monitoring data to be available to government agencies, project proponents, rightsholders, and stakeholders across the region (Johnson et al. 2011; Ball et al. 2013; Dubé et al. 2013; Sheelanere et al. 2013; Foley et al. 2017; Miller et al. 2022).

ECCC (2016) describes the importance of publicly sharing cumulative effects data for management actions and decision-making. Effective data communication is especially critical when data are meant to be used to develop management actions or responses (Moyer et al. 2008; ECCC 2016; Kipp et al. 2019).

Project characterization

To explore whether high-scoring projects shared distinct characteristics, we identified an a priori suite of project characteristics (i.e., explanatory variables) that we hypothesized would influence project scores (Table 2). We selected project characteristics based on key variables identified in the literature and our own experiences (Supplementary Material 6). We treated characteristics as binary explanatory variables depending on whether the characteristic was present or absent, as explicitly reported in the publicly available project information.
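The binary coding can be sketched as a presence/absence matrix with one row per project. We assume that a characteristic not explicitly reported is coded 0, so a 0 means "not reported" rather than "confirmed absent". The characteristic keys are hypothetical labels loosely based on characteristics discussed in this paper, not the full list in Table 2.

```python
# Hypothetical subset of characteristic labels (Table 2 holds the full list).
CHARACTERISTICS = (
    "training_materials",
    "resource_management_objective",
    "research_objective",
    "provincial_territorial_funding",
    "federal_funding",
    "bridging_organization",
)


def presence_absence(reported: dict[str, set[str]]) -> dict[str, list[int]]:
    """Return a 0/1 row per project: 1 if the characteristic is explicitly reported."""
    unknown = {c for chars in reported.values() for c in chars} - set(CHARACTERISTICS)
    if unknown:
        raise ValueError(f"not in the a priori list: {sorted(unknown)}")
    return {
        project: [1 if c in chars else 0 for c in CHARACTERISTICS]
        for project, chars in reported.items()
    }


matrix = presence_absence({"A": {"training_materials", "bridging_organization"}, "B": set()})
print(matrix["A"])  # -> [1, 0, 0, 0, 0, 1]
```

Fixing the list a priori, and rejecting anything outside it, keeps the explanatory variables separate from the scoring step as far as the shared documentation allows.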

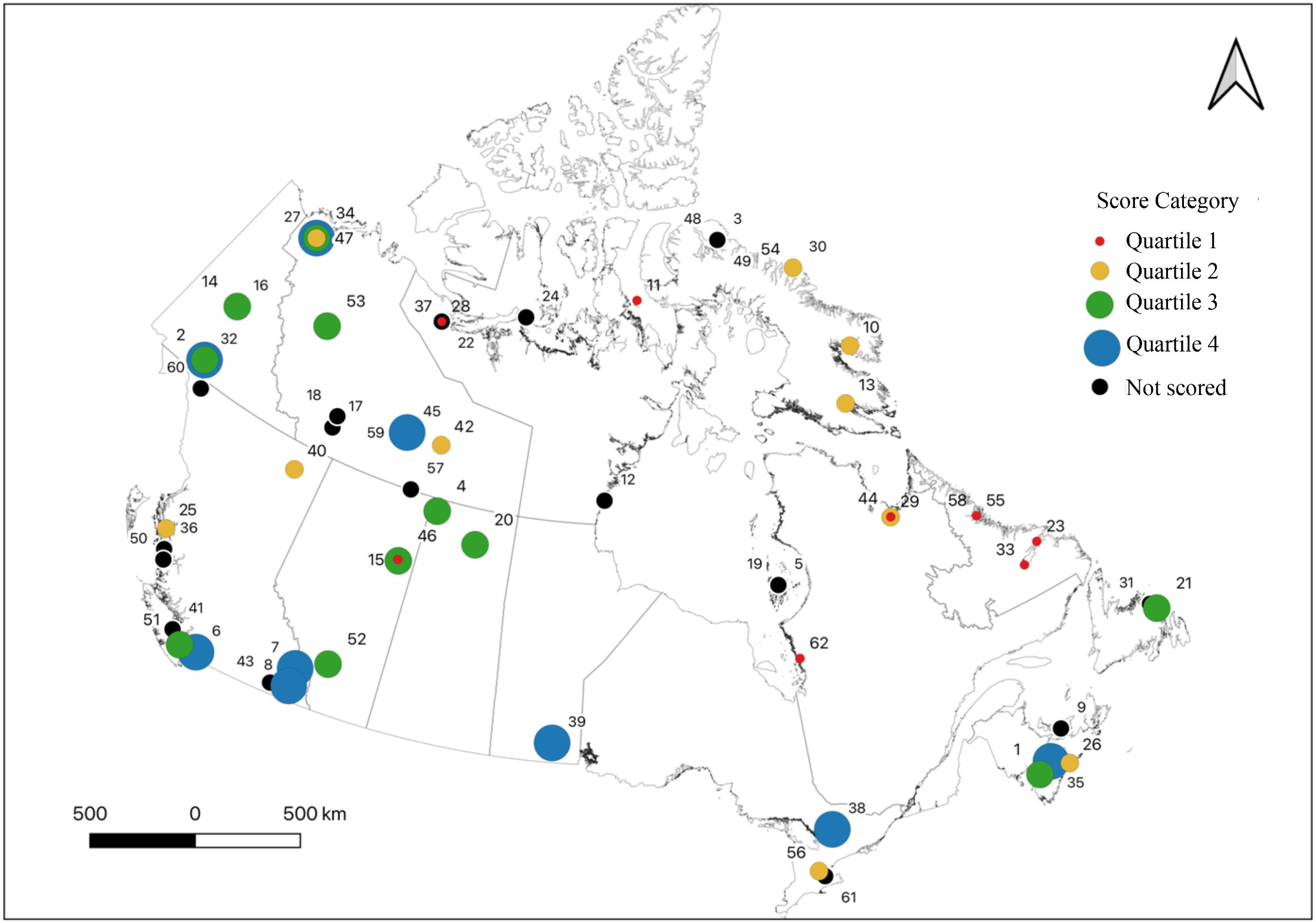

We scored and characterized 40 randomly selected projects from the 62 projects in the CBM Project Inventory (Fig. 1). The majority (58%) of the 62 projects monitored study areas in northern Canada (Nunavut [NU], Yukon Territory [YT], Northwest Territories [NT], Nunavik, and Nunatsiavut; Fig. 2). To achieve a reasonable representation of projects across Canada, we randomly sampled up to six projects from each province, territory, or northern region (i.e., Nunavik and Nunatsiavut). Because the five northern jurisdictions could therefore contribute at most 30 of the 40 projects, this cap ensured we would select at least ten projects from the southern Canadian jurisdictions (British Columbia [BC], Alberta [AB], Saskatchewan [SK], Manitoba [MB], Ontario [ON], Quebec [QC; outside Nunavik], New Brunswick [NB], Nova Scotia [NS], Prince Edward Island [PE], and Newfoundland and Labrador [NL; outside Nunatsiavut]).

We only used publicly available information to score and characterize each project. If the available project information did not report sufficient detail to assign a score for any criterion, we classified the project as having insufficient information and randomly selected another project from the inventory. Seven of the randomly selected projects had insufficient project information. We included scoring rationales as comments in the spreadsheet, which we cross-referenced with the rubric description before analysis (Supplementary Material 7; Table S7).
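The selection procedure can be sketched as random draws without replacement, capped per jurisdiction, where a project with insufficient information is set aside and the next draw replaces it. We assume the replacement draw comes from the whole remaining inventory under the same cap; the text does not say whether it was restricted to the same jurisdiction. The inventory, project IDs, and the `has_sufficient_info` check are hypothetical.

```python
import random
from collections import defaultdict


def sample_projects(inventory, target, cap=6, has_sufficient_info=lambda project_id: True, seed=None):
    """Randomly select up to `target` projects, at most `cap` per jurisdiction.

    `inventory` is a list of (project_id, jurisdiction) pairs. Projects that fail
    `has_sufficient_info` are set aside and the next random draw replaces them.
    """
    rng = random.Random(seed)
    draw_order = list(inventory)
    rng.shuffle(draw_order)  # walking a shuffled list = random draws without replacement
    per_jurisdiction = defaultdict(int)
    selected, insufficient = [], []
    for project_id, jurisdiction in draw_order:
        if len(selected) == target:
            break
        if per_jurisdiction[jurisdiction] >= cap:
            continue  # this jurisdiction has reached its cap
        if not has_sufficient_info(project_id):
            insufficient.append(project_id)
            continue
        selected.append(project_id)
        per_jurisdiction[jurisdiction] += 1
    return selected, insufficient


# Hypothetical inventory: three jurisdictions with ten projects each.
inventory = [(f"{j}-{i}", j) for j in ("NU", "YT", "BC") for i in range(10)]
chosen, skipped = sample_projects(inventory, target=12, seed=1)
print(len(chosen))  # -> 12
```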

Cross-validation

We undertook two rounds of cross-validation to ensure that our rubric scoring was reproducible (Supplementary Material 8). The reproducibility of our rubric was critical to ensuring reviewer bias did not influence project scoring and that scoring methods were transparent enough to allow others to iterate on them in the future. We encourage others to adapt and refine our rubric and improve its accuracy with new information.

Three authors individually evaluated 10 projects using the same project information and following the instructions in the rubric (data dictionary; Supplementary Material 7). We held discussions to identify the cause of all disagreements in project scores and updated the rubric to reduce inconsistency. We then completed a second cross-validation using the updated rubric. We calculated interrater agreement (i.e., Krippendorff's alpha coefficient, α) for each project attribute and characteristic following the methods described in Roche et al. (2015).

Cicchetti (1994) suggests that α values <0.4 indicate poor agreement. We carefully evaluated the potential causes of the low interrater agreement for any attribute or characteristic with α < 0.4. We agreed to data dictionary changes, often combinations of categories, that would further improve interrater agreement. All remaining project attributes and characteristics had an interrater agreement of ≥0.4, which we interpreted as an indication that our rubric was sufficiently reproducible to move forward.
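For readers less familiar with Krippendorff's alpha, a minimal sketch of the coefficient with the nominal difference metric, which suits binary characteristics, followed by the <0.4 check. We have not confirmed which metric Roche et al. (2015) apply to ordinal attribute scores; an ordinal metric would weight disagreements differently. Each unit is one project's ratings from each rater, with `None` marking a missing rating; the item names in the usage line are hypothetical.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha with the nominal difference metric."""
    coincidences = Counter()
    for unit in units:
        values = [v for v in unit if v is not None]
        m = len(values)
        if m < 2:
            continue  # a unit rated by fewer than two raters is unpairable
        # Every ordered pair of ratings from different raters adds 1 / (m - 1).
        for c, k in permutations(values, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)

    marginals = Counter()
    for (c, _k), weight in coincidences.items():
        marginals[c] += weight
    n = sum(marginals.values())

    observed = sum(w for (c, k), w in coincidences.items() if c != k)
    expected = sum(marginals[c] * marginals[k] for c in marginals for k in marginals if c != k)
    if expected == 0:
        return float("nan")  # no variation in any rating: alpha is undefined
    return 1.0 - (n - 1) * observed / expected


POOR_AGREEMENT = 0.4  # Cicchetti (1994) threshold used in the text


def flag_for_revision(alpha_by_item):
    """Return rubric items whose agreement falls below the threshold."""
    return sorted(item for item, a in alpha_by_item.items() if a < POOR_AGREEMENT)


# Three raters, two projects, perfect agreement: alpha = 1.0.
print(krippendorff_alpha_nominal([[1, 1, 1], [0, 0, 0]]))
print(flag_for_revision({"funding_source": 0.31, "training_materials": 0.72}))
```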

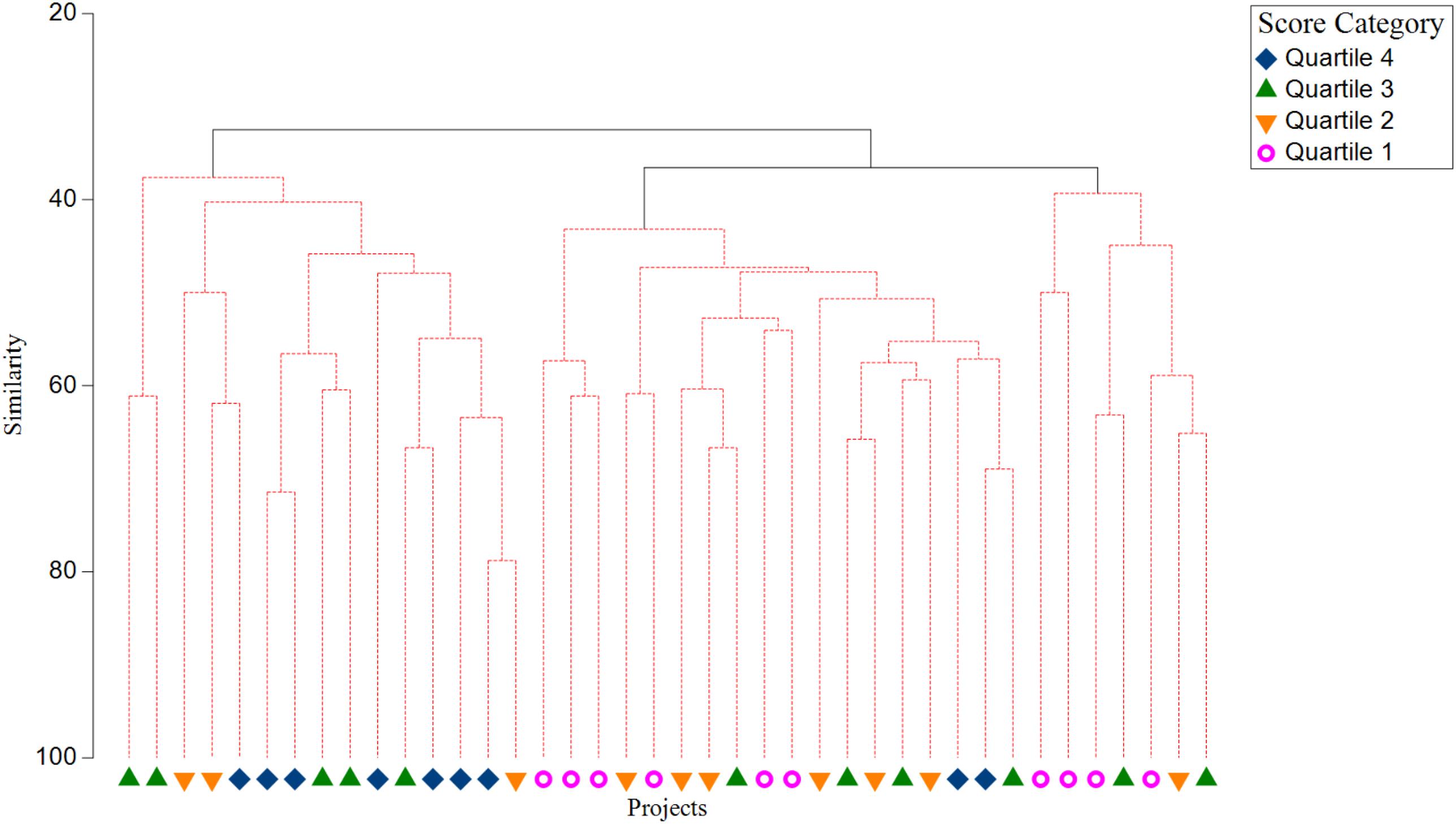

Data analysis

We used unconstrained ordination to evaluate whether projects within the same score category were grouped based on a common set of project characteristics. We generated a Bray–Curtis similarity matrix (composed of similarities calculated between each pair of projects) as the basis for the multivariate analyses. We performed a hierarchical agglomerative cluster analysis using group average linkage to determine whether the score categories were separated into the same clusters based on common project characteristics. We assigned the score category (e.g., Quartile 1, Quartile 2) as a label in the cluster analysis to visualize whether groupings were associated with the a priori categories. We used a similarity profile (SIMPROF) test to identify which groups produced by the cluster analysis were meaningful (Clarke et al. 2014). We set the significance level of the SIMPROF test at 5% and the number of permutations at 9999.
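The analyses were run in PRIMER; the sketch below only illustrates the two underlying steps in plain Python. It computes Bray–Curtis similarity on a 0 to 100 scale (for presence/absence rows this equals the Sørensen coefficient) and then merges clusters by group-average (UPGMA) linkage. The SIMPROF permutation test is not reproduced, and the toy rows are hypothetical.

```python
def bray_curtis_similarity(x, y):
    """Bray-Curtis similarity (0-100 scale) between two project rows."""
    total = sum(a + b for a, b in zip(x, y))
    if total == 0:
        return float("nan")  # two projects with no characteristics: undefined
    return 100.0 * (1.0 - sum(abs(a - b) for a, b in zip(x, y)) / total)


def similarity_matrix(rows):
    return [[bray_curtis_similarity(r, s) for s in rows] for r in rows]


def group_average_clustering(sim):
    """Agglomerative clustering with group-average (UPGMA) linkage.

    Returns the merge history as (members_a, members_b, similarity) tuples,
    in the order the merges happen (most similar first).
    """
    clusters = [frozenset([i]) for i in range(len(sim))]
    merges = []

    def average_similarity(a, b):
        # Mean of all pairwise similarities between members of the two clusters.
        return sum(sim[i][j] for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                s = average_similarity(clusters[i], clusters[j])
                if best is None or s > best[0]:
                    best = (s, i, j)
        s, i, j = best
        merged_a, merged_b = clusters[i], clusters[j]
        merges.append((sorted(merged_a), sorted(merged_b), s))
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged_a | merged_b)
    return merges


# Hypothetical presence/absence rows for four projects.
rows = [[1, 1, 0, 0], [1, 1, 0, 1], [0, 0, 1, 1], [0, 0, 1, 0]]
for members_a, members_b, s in group_average_clustering(similarity_matrix(rows)):
    print(members_a, members_b, round(s, 1))
```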

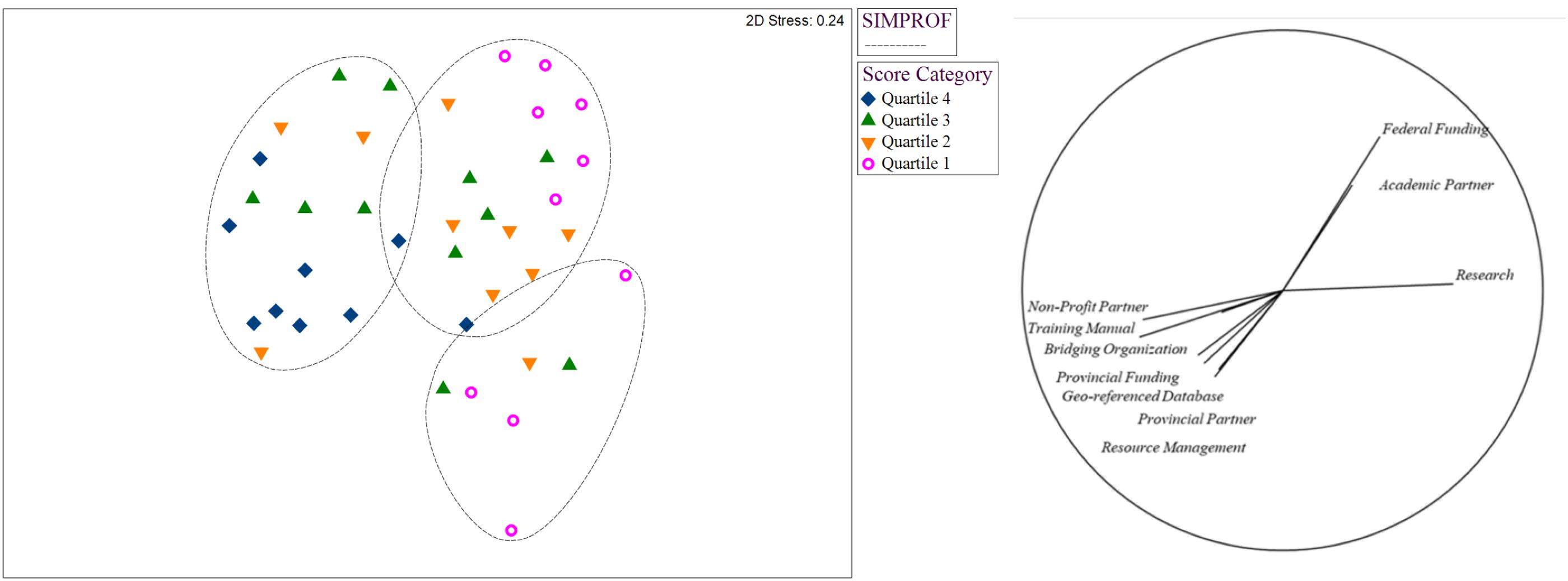

We used non-metric multi-dimensional scaling (nMDS) ordination to portray the differences between score categories and to evaluate whether score categories contained unique suites of project characteristics. We displayed the ten project characteristics that had the greatest influence on dissimilarity calculations between score categories on a vector plot adjacent to the nMDS ordination. We used the similarity percentages routine (SIMPER) to assess which project characteristics influenced dissimilarities between score categories and similarities within score categories. We used PRIMER version 7® software to complete all analyses.
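The core of a between-group SIMPER calculation can be sketched as follows: for every pair of projects drawn from two groups, each characteristic's share of the Bray–Curtis dissimilarity is recorded, and these shares are averaged over all pairs. The contributions sum to the average between-group dissimilarity. PRIMER's output also reports ratios and cumulative percentages, which this sketch omits; the groups and names are hypothetical.

```python
from itertools import product


def simper_between(group_a, group_b, names):
    """Average contribution of each characteristic to the Bray-Curtis
    dissimilarity between two groups, sorted from largest to smallest."""
    totals = [0.0] * len(names)
    pairs = 0
    for x, y in product(group_a, group_b):  # every between-group pair of projects
        denom = sum(a + b for a, b in zip(x, y))
        if denom == 0:
            continue  # both projects empty: dissimilarity undefined, pair skipped
        for i, (a, b) in enumerate(zip(x, y)):
            totals[i] += 100.0 * abs(a - b) / denom
        pairs += 1
    if pairs == 0:
        raise ValueError("no comparable project pairs")
    averages = {name: total / pairs for name, total in zip(names, totals)}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical high- and low-scoring groups described by three characteristics.
high = [[1, 1, 0], [1, 0, 0]]
low = [[0, 0, 1], [0, 1, 1]]
names = ["training_materials", "provincial_territorial_funding", "research_objective"]
for name, contribution in simper_between(high, low, names):
    print(name, round(contribution, 2))
```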

Results and discussion

The cumulative effects of climate change and human development continue to exacerbate environmental degradation and threaten environmental sustainability. Canada needs to improve CEA methodology to secure ecosystem sustainability for future generations (Seitz et al. 2011; Ball et al. 2013; Sheelanere et al. 2013; Cronmiller and Noble 2018). CEAs should consider scientific information, Indigenous Knowledge, and community knowledge. While it is likely that CBM could contribute to all these components of CEAs, here we explored the degree to which current CBM projects appear prepared to contribute to the western science component of CEA biophysical baseline studies. Our objectives were to evaluate the ability of CBM projects in Canada to collect environmental data that could support western science approaches to CEA and to identify what characteristics (if any) high-scoring CBM projects shared, to inform the design of future CBM projects (Hunsberger et al. 2005; Lawe et al. 2005; McKay and Johnson 2017a; Gérin-Lajoie et al. 2018). We found that higher-scoring projects shared common characteristics (Table 3) that we propose should be considered when designing future CBM projects with the objective of supporting CEA.

Our evaluation of current CBM projects is novel in that it combines qualitative, semiquantitative, and quantitative components and decisions. We hope this approach catalyzes more efficient design and deployment of future CBM projects. Previous work that reviewed and assessed CBM project information used surveys, interviews, and content analyses to collect project information and presented tables and case studies to summarize key project attributes (e.g., Danielsen et al. 2005; Conrad and Daoust 2008; Gofman 2010; Johnson et al. 2016; Kouril et al. 2016; Parlee et al. 2017; Raygorodetsky and Chetkiewicz 2017; McKay and Johnson 2017b; Kipp et al. 2019). These previous approaches identified common characteristics among CBM projects and learned from practitioners which attributes contribute to project success and sustainability. While our goal was similar to these previous studies, we assessed whether higher-scoring projects shared common characteristics using multivariate statistical analyses applied to a scoring rubric. We took this approach because (i) developers of policies and practices often prefer quantitative evidence in the design of western science (including CBM) projects and (ii) it facilitates future iterations, comparisons across iterations, and improvements on our methods. In the future, the collation of CBM project information could be expanded by requesting further information from CBM project managers, further cross-validating and modifying the scoring criteria and project characteristics, and adding more CBM projects to these lists. The project scoring spreadsheet, data dictionary, and PDFs of all project information used to inform this analysis are available as Supplementary Material. Thus, the evaluations made here are presented in a transparent format and can be reassessed, adapted, and corrected at any time. In addition, others looking to achieve a different objective (e.g., answer a different question) can use our project list as a snapshot of CBM projects in Canada and develop new approaches for project evaluations.

Our analysis had an unavoidable circularity (i.e., we used the same information to score the projects and identify the presence of explanatory variables). We scored projects based on publicly available documentation. We then used this same documentation to determine whether there were any shared characteristics among score categories. We separated project characteristics from project scoring as much as possible in our rubric. Nonetheless, it is important to highlight that our analysis identifies suites of commonly shared CBM project characteristics rather than directional relationships between characteristics and scores. It may be useful to identify common associations, for example, the use of a bridging organization and the conduct of CBM with a larger number of communities and participants, but understanding the causality of these associations would require further research. The scope of this analysis (i.e., 317 potential projects screened; 40 projects scored and evaluated in detail) necessitated a focus on generally available documentation and associations between practices and characteristics. This focus may have disproportionately affected our understanding of small-scale, locally focused projects, especially those that focused on Indigenous Knowledge.

The cluster analysis separated CBM projects into three significant groups according to the SIMPROF test (Fig. 3). The first significant branching of the dendrogram separated one cluster containing seven (78%) Quartile 4 projects. The other cluster contained all 10 Quartile 1 projects and seven (70%) Quartile 2 projects. The Quartile 3 projects were almost equally divided into the two branches of the dendrogram (five vs. six). A further significant division in one branch grouped six (60%) Quartile 1 projects with six (60%) Quartile 2 projects, three (27%) Quartile 3 projects, and two (22%) Quartile 4 projects. The final cluster appeared to be an intermediate or alternative grouping that contained four (40%) Quartile 1, one (10%) Quartile 2, and two (18%) Quartile 3 projects. These dendrogram branches suggested project characteristics differed between the Quartile 4 and Quartile 1 score categories, while the Quartile 2 and Quartile 3 projects were less distinct.

The nMDS ordination plot presented the Quartile 4 projects as clustered on the left side of the plot, whereas the Quartile 1 projects clustered on the right side (Fig. 4). A secondary pattern further distinguished several Quartile 1 projects in the plot's lower right quadrant. The ordination's high stress (>0.2) indicated that the points were close to being arbitrarily placed in two-dimensional space. However, the cluster analysis supported the general conclusion that the three groups were distinct (Clarke et al. 2014). We examined the three-dimensional plot (stress 0.17) to ensure that the Quartile 4 and Quartile 1 projects were generally clustered separately. After conducting the nMDS, we restricted further investigation to the Quartile 1 and Quartile 4 projects, as there appeared to be no discernible difference in characteristics between the Quartile 2 and Quartile 3 projects.
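For reference, a common definition of nMDS stress is Kruskal's stress formula 1, which we understand to be the form PRIMER reports (our assumption; the text does not state it). Here d_jk is the distance between projects j and k in the ordination and \hat{d}_jk is the value fitted by monotonic regression of those distances on the rank order of the Bray–Curtis dissimilarities:

```latex
\text{stress} = \sqrt{\frac{\sum_{j<k} \left( d_{jk} - \hat{d}_{jk} \right)^{2}}{\sum_{j<k} d_{jk}^{2}}}
```

Stress near 0 means the ordination preserves the rank order of dissimilarities well. Under the >0.2 guideline used above, the two-dimensional configuration was only weakly constrained, which is why it was checked against the three-dimensional solution (stress 0.17).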

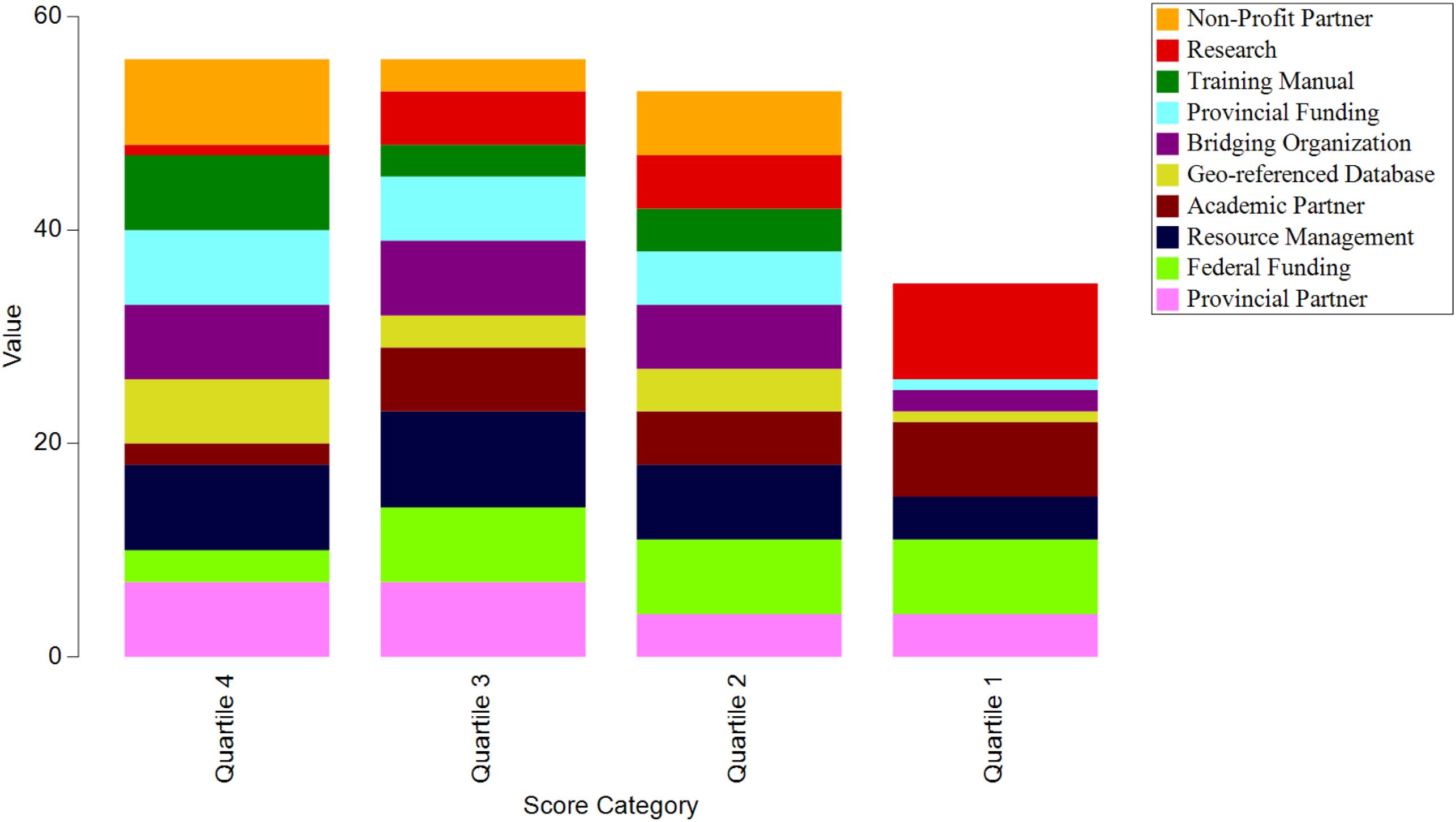

Several of the shared characteristics we identified could help support the intentional design of future CBM projects. Results of the SIMPER analysis are in Tables 3–5, Figs. 4 and 5, and Supplementary Material 9. The vector plot adjacent to the nMDS displayed the 10 most influential project characteristics identified by the SIMPER analysis (Fig. 4). We found that CBM projects in Quartile 4 (highest scoring projects) shared key characteristics that were uncommon in the Quartile 1 projects (Figs. 4 and 5 and Table 4). Quartile 4 project participants followed standardized data collection protocols described in training manuals to collect measurements and samples in the field. Communities and project partners used project data to create baseline datasets and inform resource management decisions. These projects typically received funding and in-kind support from provincial/territorial government agencies. Raw data were publicly available through georeferenced databases that were often created and managed by a third party. These projects were typically administered by, or partnered with, non-profit organizations that often acted as the bridging organization to coordinate community member involvement in the project.

Training materials

The support framework provided by training materials was positively associated with the Quartile 4 projects. All Quartile 4 projects described training community members to collect quantitative data through sample collection and field measurements, with 89% documenting their standardized monitoring protocols in training materials (e.g., manuals, videos, and online courses). None of the Quartile 1 projects reported using training materials. Standardized data collection methods can help CBM projects inform environmental decision-making (Hunsberger et al. 2005; Conrad and Daoust 2008; Kouril et al. 2016; McKay and Johnson 2017a; Herman-Mercer et al. 2018). Training has been identified as a critical component of CBM projects that aim to collect data comparable to those collected by professional scientists (Fore et al. 2001; Sharpe and Conrad 2006). The use of training materials may enable participants to collect data over long periods without the high costs associated with routine in-person training sessions. Training materials may also facilitate training for the larger number of project participants that typified the Quartile 4 projects. Finally, these training materials provided concrete evidence of the standardized protocols used in quantitative data collection, which may have encouraged more decision-makers to use the data (Kouril et al. 2016; McKay and Johnson 2017a), thereby increasing a project's data application score.

Project objectives

The Quartile 4 projects had objectives linked to resource management decision-making, whereas the Quartile 1 projects more often had research objectives (Fig. 4 and Table 5). Resource management objectives were also associated with higher data application scores: all Quartile 4 projects collected data to inform resource management decisions or environmental assessment, and many (67%) specifically aimed to generate baseline datasets. Projects with a resource management objective had a median data application score of 5. Conversely, Quartile 1 projects were typically research-focused and may not have sought to link their results directly to decision-making. This is not a weakness of Quartile 1 projects, only a difference in objectives. Projects with research-oriented objectives and no clear resource management objective had a median data application score of 3. Linking data to decision-making has previously been identified as a major contributor to CBM project sustainability (Kouril et al. 2016; Parlee et al. 2017; McKay and Johnson 2017b). CBM projects designed to inform resource management decisions could be more attractive to participants and funding agencies, leading to longer project durations and higher participation rates (Danielsen et al. 2005; Carlsson et al. 2015; Kouril et al. 2016; McKay and Johnson 2017b). Consistent with this, projects that scored ≥4 for project duration had a median data application score of 5, and projects that scored ≥4 for project participants had a median data application score of 4. High project duration and project participant scores were therefore associated with high data application scores.
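The median comparisons above (e.g., a median data application score of 5 for projects with a resource management objective versus 3 for research-oriented projects without one) amount to grouping scored projects by a characteristic and taking the median of an ordinal attribute score within each group. The minimal Python sketch below illustrates that calculation only; the project records, the field names `objectives` and `data_application`, and the helper `median_score` are hypothetical and are not the study's data or analysis code.

```python
from statistics import median

# Hypothetical scored projects (illustrative values only, not the study's data).
# Each attribute is assumed to be scored on an ordinal 1-5 rubric scale.
projects = [
    {"name": "A", "objectives": {"resource management"}, "data_application": 5},
    {"name": "B", "objectives": {"resource management", "baseline"}, "data_application": 4},
    {"name": "C", "objectives": {"resource management", "research"}, "data_application": 5},
    {"name": "D", "objectives": {"research"}, "data_application": 3},
    {"name": "E", "objectives": {"research", "capacity building"}, "data_application": 2},
    {"name": "F", "objectives": {"research"}, "data_application": 4},
]


def median_score(records, attribute, include):
    """Median of an ordinal attribute score over the records selected by `include`.

    The median is used rather than the mean because rubric scores are ordinal.
    Returns None when no record matches the selection.
    """
    scores = [r[attribute] for r in records if include(r)]
    return median(scores) if scores else None


# Projects with a resource management objective.
rm_median = median_score(
    projects, "data_application",
    lambda r: "resource management" in r["objectives"],
)

# Research-oriented projects with no resource management objective.
research_median = median_score(
    projects, "data_application",
    lambda r: "research" in r["objectives"]
    and "resource management" not in r["objectives"],
)

print(rm_median, research_median)  # 5 3
```

With an even number of projects in a group, `statistics.median` averages the two middle scores (e.g., 4.5); `statistics.median_low` or `median_high` would instead keep the result on the ordinal scale.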

Funding

We also observed an association between project objectives and both funding sources and partnerships. The Quartile 4 projects, whose objectives were to collect resource management and baseline data, were often funded by or partnered with provincial/territorial governments. In contrast, the research objectives of the Quartile 1 projects were often associated with federal funding. Federal research funding may less frequently target large-scale, long-term projects, which would reduce project duration and project participant scores: projects that received federal funding but no provincial/territorial funding had median project duration and project participant scores of 2. Federal funding sources may also target objectives not typically associated with CEA (e.g., research, community capacity building, fostering community connections to the land, and food security). In contrast, provincial and territorial funding is often directed at resource management objectives, since these are frequently matters of provincial/territorial jurisdiction.

Data availability, accessibility, and management

Provincial/territorial government funding was frequently associated with publicly available data, resulting in projects with high data availability scores: the majority (63%) of the projects we scored that received provincial/territorial funding also allowed public data access. Since 2011, Canadian provincial/territorial governments have increasingly recognized the importance of open data (e.g., GO 2021; GBC 2022; GNWT 2022). Provincial/territorial funding may therefore have placed stricter requirements on project data availability. It is also possible that provincial/territorial agencies give CBM organizations more support than federal funding agencies do in meeting data accessibility requirements, including by providing highly accessible data-sharing platforms. In addition, provincial/territorial governments often employ technical personnel in regional offices who have considerable experience working with community-based groups. Note that public data access is not always desirable for CBM projects (e.g., projects that involve protected or sensitive information, such as highly valued sites and Indigenous Knowledge, or that seek to advance entirely local monitoring objectives).

The Quartile 4 projects generally provided public data access through georeferenced databases (i.e., sampling locations displayed on online maps). Georeferenced databases provide interactive maps that make quantitative and qualitative data accessible to non-technical users (Gérin-Lajoie et al. 2018). These databases may be purpose-built or provided by third parties. Our findings are consistent with recommendations in the literature regarding the use of digital databases (Hunsberger et al. 2005), as the long-term sustainability of CBM projects depends on managing data in ways that facilitate analysis and communication of results (Eamer 2011) and that give project partners and decision-makers access to the data (McKay and Johnson 2017b). The Quartile 4 projects offered excellent examples of how rich data can be made easily accessible to support CEA and other applications, such as research and resource management, which may have increased their data application scores.

Bridging organizations

The participation of bridging organizations was also common in the Quartile 4 projects (78%). In this context, a bridging organization is a non-profit organization, private consultant, or co-management organization tasked with coordinating community member involvement in the project. Bridging organizations act as liaisons between external researchers and community participants to ensure ongoing community engagement, expand opportunities for knowledge exchange, and build trust among project partners (Carlsson et al. 2015; Kouril et al. 2016; Wilson et al. 2018). The bridging organizations for our Quartile 4 projects were primarily non-profit organizations, with one local co-management agency. Non-profit organizations may have been instrumental in sustaining relationships with, and coordinating, the larger numbers of participants in the Quartile 4 projects. By comparison, the median project participant score for projects without bridging organizations was 2. The Gwich'in Harvest Study was the only Quartile 4 project to report a co-management agency as its bridging organization (Raygorodetsky and Chetkiewicz 2017), and it involved the fewest participants among the Quartile 4 projects (four communities, with multiple participants per community). Although coordinating a CBM project across four communities is already logistically challenging, the other Quartile 4 projects engaged even more communities (21–31) or considerable numbers of volunteers (14–600) or non-profit groups (6–10). All of these projects involved non-profit bridging organizations, suggesting that such organizational structures could benefit current and future CBM projects that aim to involve more communities and participants not tied to a single local government.

Other project attributes and considerations

We found that the majority (67%) of Quartile 4 projects were situated in southern and western Canada, while the majority (80%) of Quartile 1 projects were in northeastern Canada (Nunavut, Nunavik, and Nunatsiavut; Fig. 2 and Table 6). This geographic pattern may reflect a non-uniform distribution of CBM project objectives across the country. For example, CBM projects in southern and western Canada frequently reported resource management as a project objective (74%), and they often shared data publicly (79%). Conversely, CBM projects in northeastern Canada frequently reported research (100%) or health and safety (50%) as project objectives. These projects placed more emphasis on local communication, and only 8% reported public data access. Note that we scored the data availability of projects that published project information in scientific journals as 3/5, because the raw data were not publicly available and the release of project findings depended on publication timelines. CBM projects in northeastern Canada may publish detailed project information less frequently because of project collaborations, the presence of sensitive data, or foundational data objectives that do not extend to general public use.

This geographic pattern may also reflect a fundamental limitation of our study: our analysis depended on project information that was available online. Our master list is not an exhaustive list of all CBM projects in Canada, although we strove to make it comprehensive. Our compilation was biased toward projects known to our respective networks, projects discussed in the peer-reviewed literature, projects with online documentation, and projects included in previous CBM reviews. In addition, we evaluated projects using only information readily available online. This approach under-represents CBM projects that do not target reporting to a public audience (e.g., those focused on reporting within small communities or handling sensitive information) and therefore have a small online footprint. Verifying details through interviews with project managers or participants would more accurately capture the structures and procedures of CBM projects that do not target a public audience.

CBM projects with restricted public data access would have scored low for data availability. Some projects, particularly those that involve Indigenous Knowledge, were less likely to report public data access, for very good reasons related to ownership, control, access, and possession (OCAP; FNIGC 2023) and Indigenous data sovereignty. We identified Indigenous Knowledge as a project characteristic only for projects that explicitly described the use of Indigenous Knowledge at a particular project stage (e.g., design, data collection). Not all Indigenous-led projects explicitly describe how Indigenous Knowledge is incorporated, so the Indigenous Knowledge project characteristics do not identify all Indigenous-led projects in our inventory. The average data availability score for projects that explicitly reported Indigenous Knowledge was 3/5, suggesting that data sharing in these projects was less frequently directed toward a public audience. Restricted data sharing and access were likely due to sensitivities surrounding sharing Indigenous Knowledge publicly and the community's ownership of its Indigenous Knowledge (Gérin-Lajoie et al. 2018; Thompson et al. 2020). These are not project weaknesses; they are often deliberate features of design. Because our study depended solely on online project documentation, projects with data sharing protocols designed to protect Indigenous sovereignty (including data sovereignty) would likely score lower for attributes such as data collection protocols and data management. Such lower scores reflect only that this study targeted CBM efforts directed toward western science, where open data are now often a requirement.

Projects oriented toward a local audience that collect and protect sensitive data may be able to contribute to CEA in many ways (e.g., by describing baseline biophysical or socioeconomic conditions and providing different forms of knowledge), but these contributions are not captured by a rubric based solely on publicly available documentation and focused on western scientific methods. This is an important gap in our rubric, because Indigenous Knowledge is widely acknowledged as essential for environmental monitoring and effective CEA, contributing a holistic understanding of socio-ecological systems, past, present, and future (Gofman 2010; ECCC 2016; Johnson et al. 2016; Kouril et al. 2016; McKay and Johnson 2017b). While Indigenous Knowledge is critical to effective CEA, individual project managers must address project-specific sensitivities surrounding sharing Indigenous Knowledge publicly and the community's ownership of its Indigenous Knowledge; each community, and the individuals who choose to share their Indigenous Knowledge, will need to play an equitable role in CEA (Gérin-Lajoie et al. 2018; Thompson et al. 2020). Among many other reconciliatory actions that include respect for Indigenous sovereignty, impact assessment and CEA processes must evolve to facilitate meaningful participation of Indigenous peoples in both the western science and Indigenous science aspects of assessments (Arsenault et al. 2019; Eckert et al. 2020). It is therefore imperative that improvements to western science approaches to CEA include avenues for meaningful participation by Indigenous peoples and opportunities to be informed by Indigenous Knowledge. Future work could build on our study by focusing on the CBM project characteristics that are important for Indigenous-led CBM. A study of Indigenous-led CBM in Canada could use our project list as a starting point and focus on the projects we identified as having explicitly included Indigenous Knowledge (see Supplementary Material 7; Table S7).

It is important to note that the CBM projects included in our study had various objectives independent of contributing to CEA. Our assessment of each project's ability to contribute environmental monitoring data to baseline studies for CEAs was not an evaluation of project quality or of efficacy in fulfilling the projects' stated and original objectives. Furthermore, we based project scoring entirely on available documentation; newly available documentation could describe project practices in greater detail and thus change project scores. We generated our project scores using an ordinal-scale rubric, which yields relative project rankings. Thus, projects that did not receive the highest scores (Quartile 1–3 projects) may still be able to contribute valuable baseline data to CEAs; they were simply ranked lower than projects that demonstrated exceptional ability (Quartile 4 projects) according to our scoring criteria. Despite these limitations, we think that quantitatively identifying the characteristics shared by high-scoring projects can advance the design of future effective CBM projects.
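Because an ordinal rubric yields relative rankings, a project's quartile depends on the scores of the other projects in the inventory, not only on its own score. The minimal Python sketch below illustrates this point under two assumptions that are not necessarily the procedure used in this study: each project is summarized by a single hypothetical total rubric score, and quartiles are assigned by rank position, with tied scores sharing the quartile of their lowest rank. The function `assign_quartiles` and both cohorts are hypothetical.

```python
def assign_quartiles(totals):
    """Assign each project a quartile (1 = lowest, 4 = highest) by relative rank.

    Assumption for illustration: quartile = 1 + floor(4 * rank / n), where rank
    is the 0-based position of the project's score in ascending order. Tied
    scores use the position of their first occurrence, so ties share a quartile.
    """
    ordered = sorted(totals.values())
    n = len(ordered)
    return {
        name: 1 + (4 * ordered.index(score)) // n
        for name, score in totals.items()
    }


# Hypothetical total rubric scores for two different project inventories.
cohort_a = {"P1": 12, "P2": 15, "P3": 18, "P4": 20,
            "P5": 22, "P6": 25, "P7": 27, "P8": 30}
cohort_b = {"P5": 22, "Q1": 10, "Q2": 11, "Q3": 12}

# P5 has the same total score in both inventories but lands in different quartiles.
print(assign_quartiles(cohort_a)["P5"])  # 3
print(assign_quartiles(cohort_b)["P5"])  # 4
```

Under these assumptions, adding or removing projects from the inventory can move a project between quartiles without any change to its own documentation or score.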